NLU Version 4.0.0

OCR Visual Tables into Pandas DataFrames from PDF/DOC(X)/PPT files, 1000+ new state-of-the-art transformer models for Question Answering (QA) for over 30 languages, up to 700% speedup on GPU, 20 Biomedical models for over 8 languages, 50+ Terminology Code Mappers between RXNORM, NDC, UMLS,ICD10, ICDO, UMLS, SNOMED and MESH, Deidentification in Romanian, various Spark NLP helper methods and much more in 1 line of code with John Snow Labs NLU 4.0.0

NLU 4.0 for OCR Overview

On the OCR side, we now support extracting tables from PDF/DOC(X)/PPT files into structured pandas dataframe, making it easier than ever before to analyze bulks of files visually!

Checkout the OCR Tutorial for extracting Tables from Image/PDF/DOC(X) files to see this in action

These models grab all Table data from the files detected and return a list of Pandas DataFrames,

containing Pandas DataFrame for every table detected

| NLU Spell | Transformer Class |

|---|---|

nlu.load(pdf2table) |

PdfToTextTable |

nlu.load(ppt2table) |

PptToTextTable |

nlu.load(doc2table) |

DocToTextTable |

This is powerd by John Snow Labs Spark OCR Annotataors for PdfToTextTable, DocToTextTable, PptToTextTable

NLU 4.0 Core Overview

-

On the NLU core side we have over 1000+ new state-of-the-art models in over 30 languages for modern extractive transformer-based Question Answering problems powerd by the ALBERT/BERT/DistilBERT/DeBERTa/RoBERTa/Longformer Spark NLP Annotators trained on various SQUAD-like QA datasets for domains like Twitter, Tech, News, Biomedical COVID-19 and in various model subflavors like sci_bert, electra, mini_lm, covid_bert, bio_bert, indo_bert, muril, sapbert, bioformer, link_bert, mac_bert

-

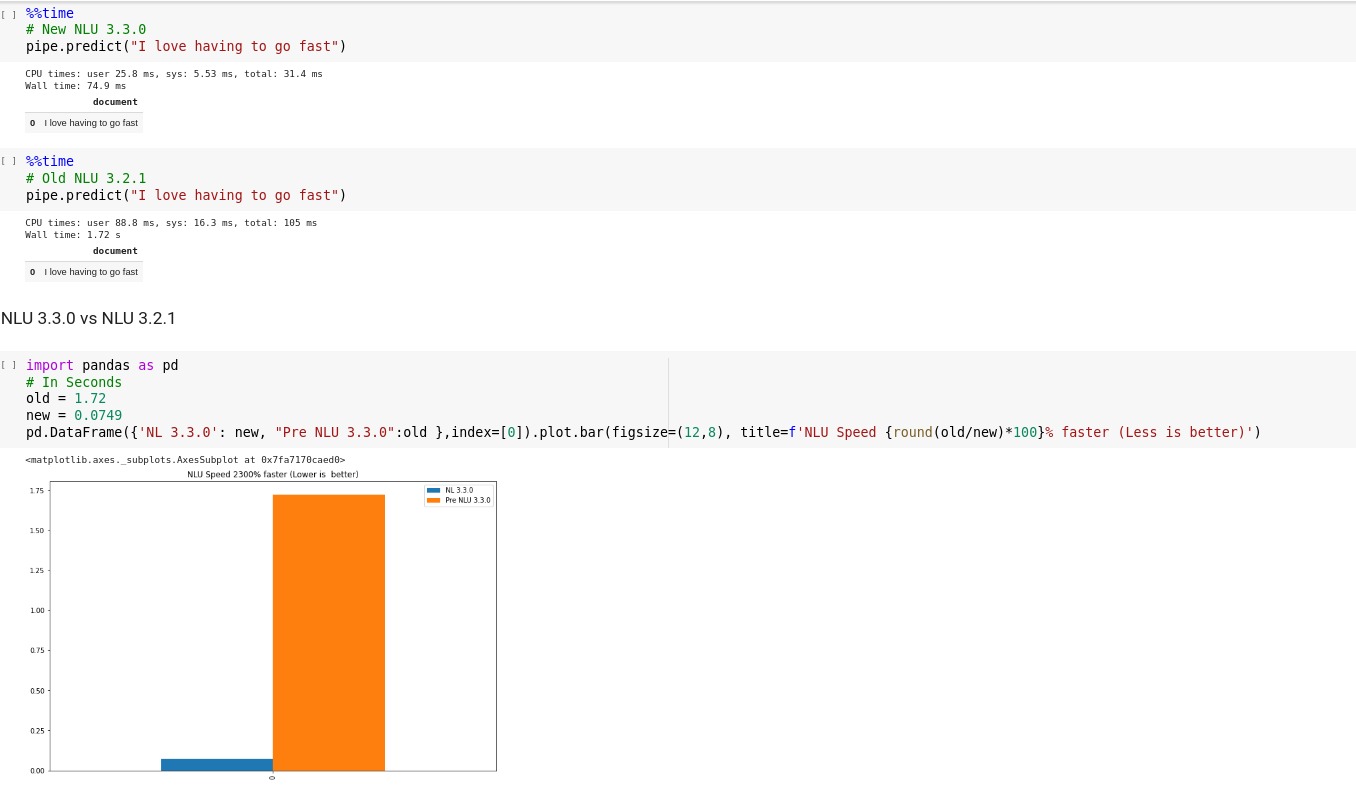

Additionally up to 700% speedup transformer-based Word Embeddings on GPU and up to 97% speedup on CPU for tensorflow operations, support for Apple M1 chips, Pyspark 3.2 and 3.3 support. Ontop of this, we are now supporting Apple M1 based architectures and every Pyspark 3.X version, while deprecating support for Pyspark 2.X.

-

Finally, NLU-Core features various new helper methods for working with Spark NLP and embellishes now the entire universe of Annotators defined by Spark NLP and Spark NLP for healthcare.

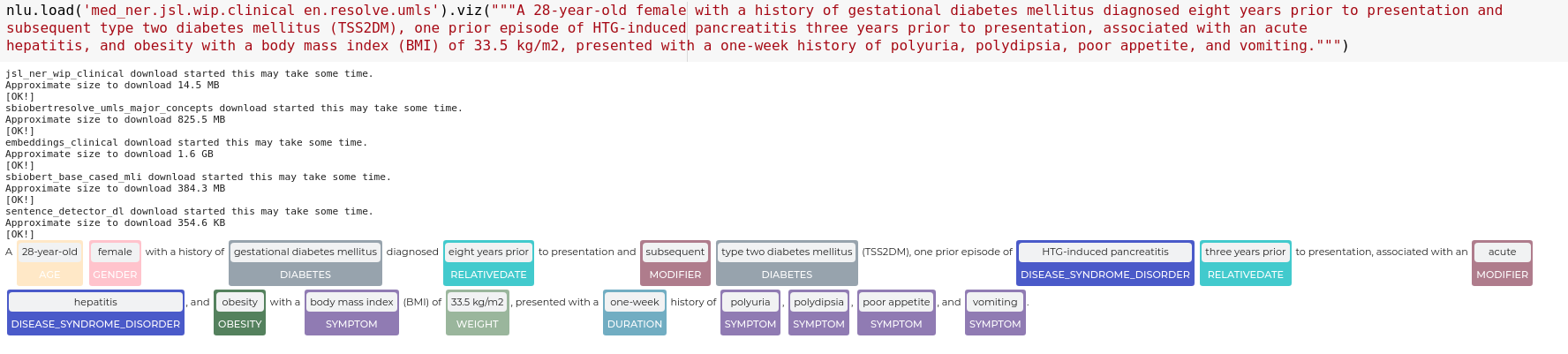

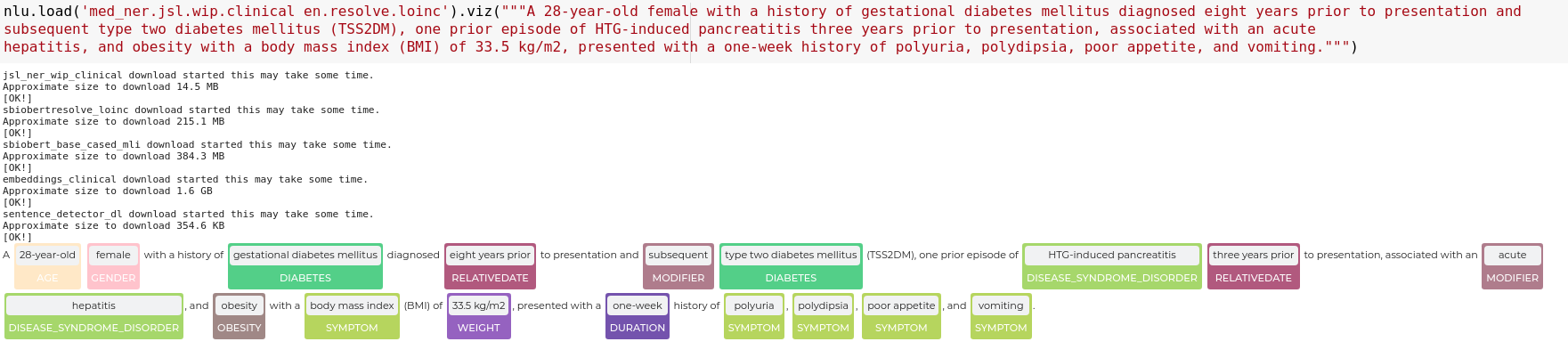

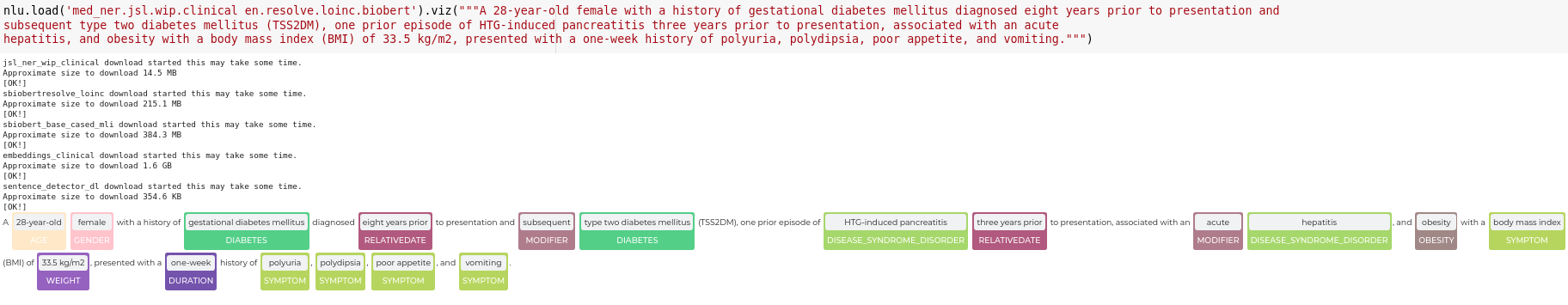

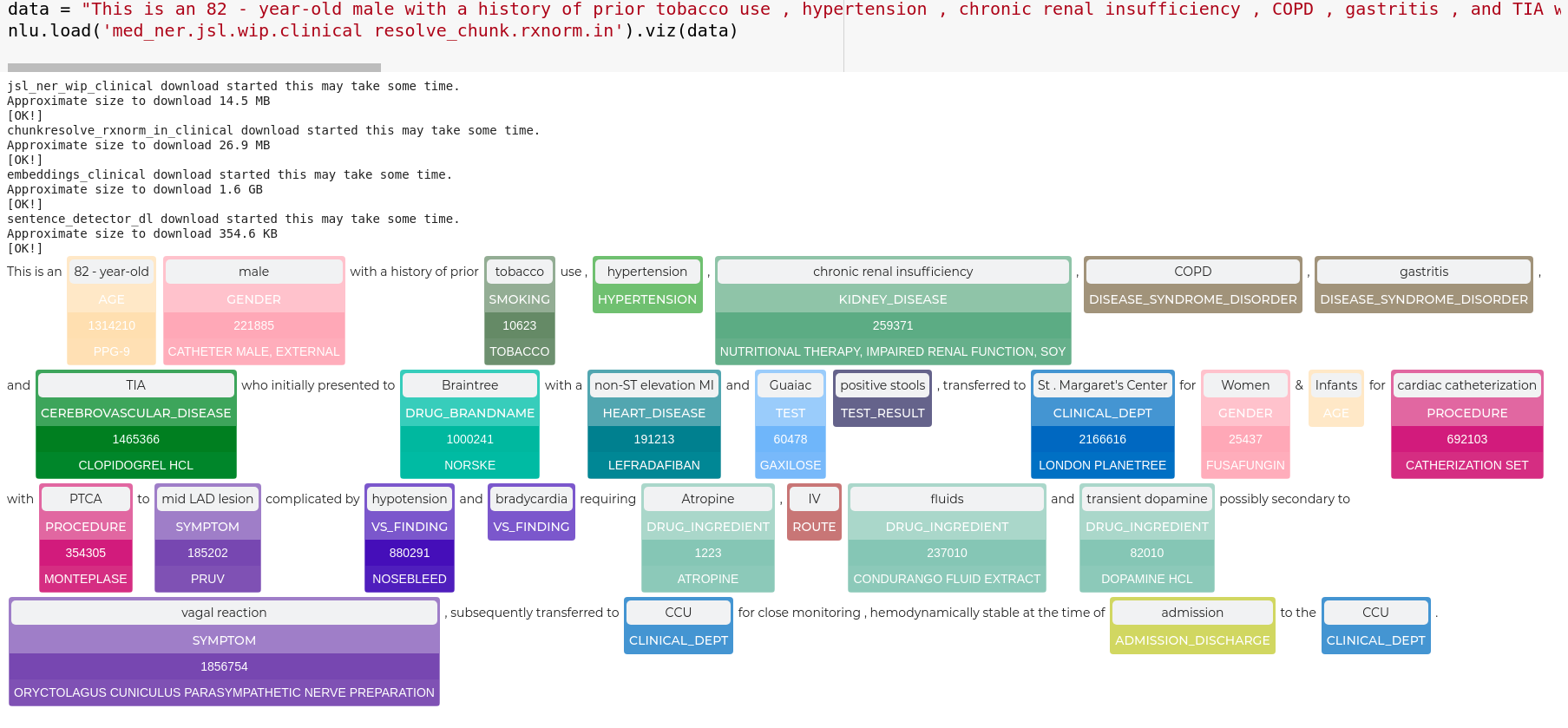

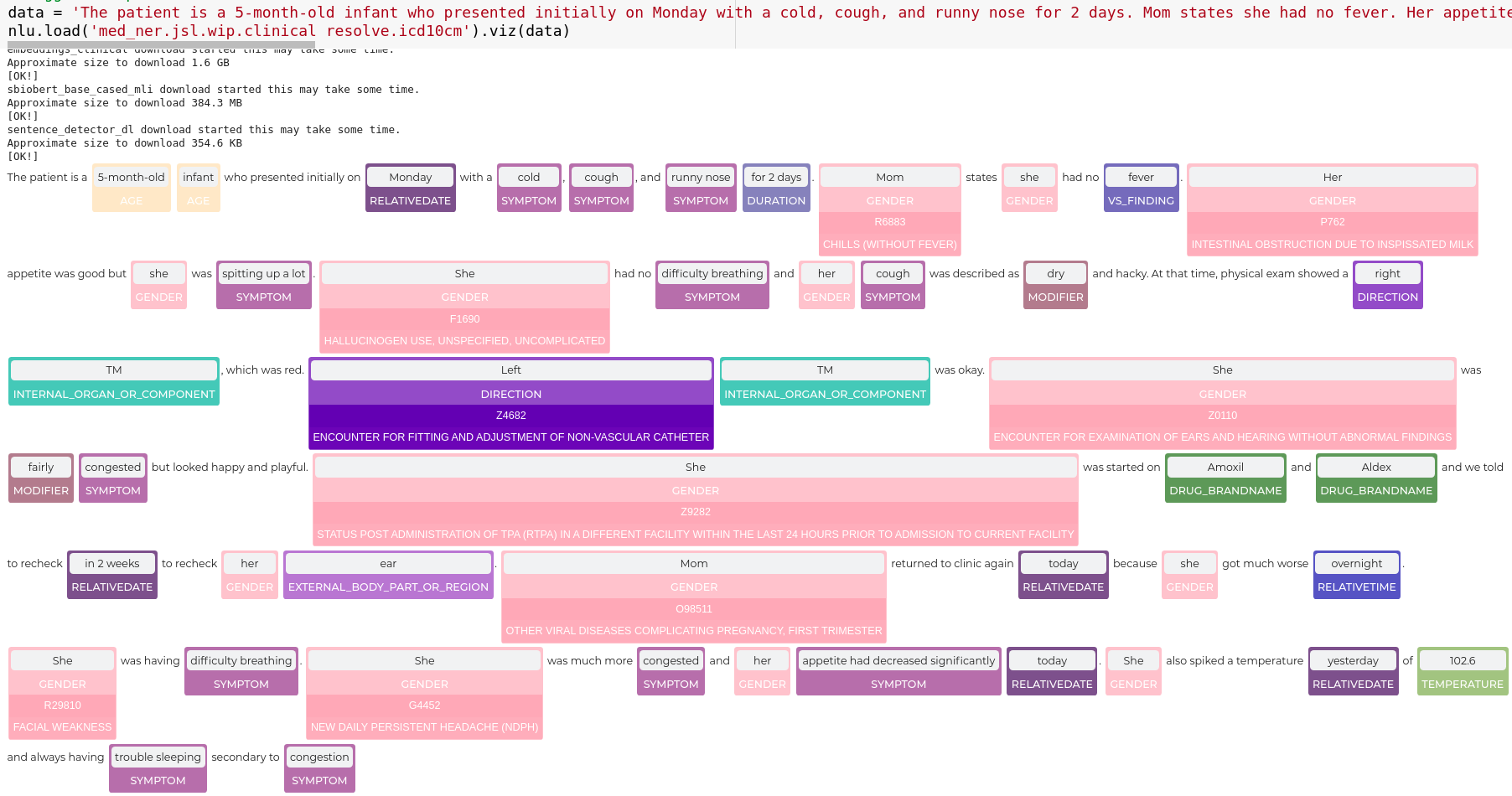

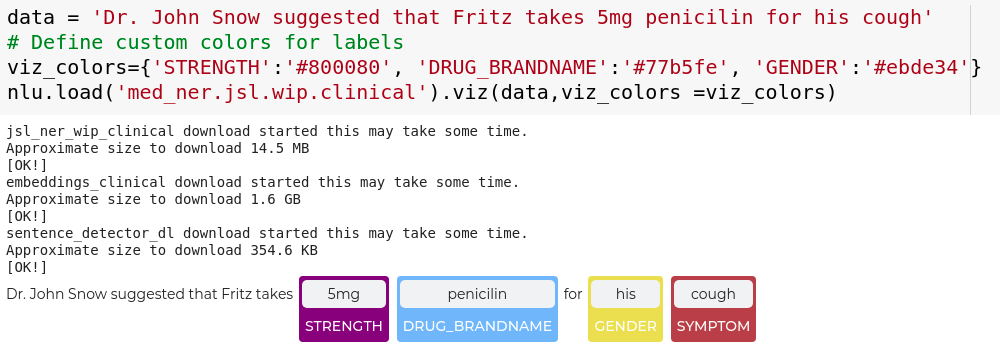

NLU 4.0 for Healthcare Overview

-

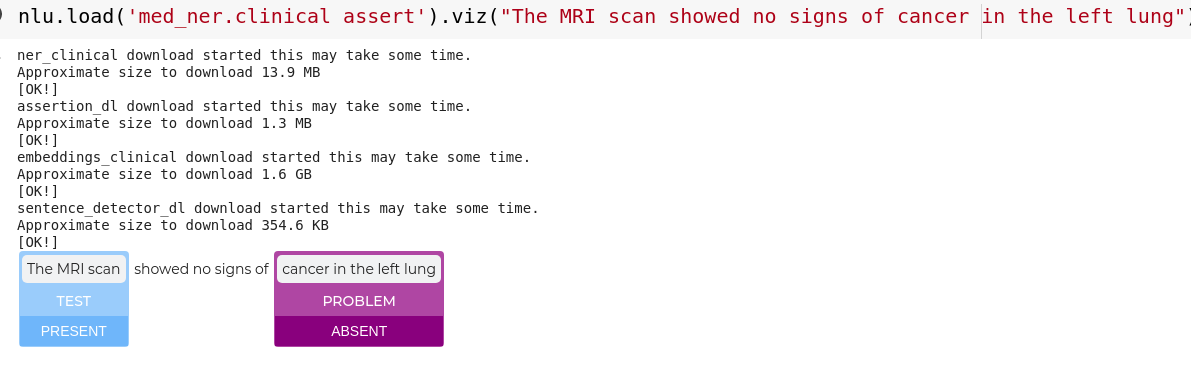

On the healthcare side NLU features 20 Biomedical models for over 8 languages (English, French, Italian, Portuguese, Romanian, Catalan and Galician) detect entities like

HUMANandSPECIESbased on LivingNER corpus -

Romanian models for Deidentification and extracting Medical entities like

Measurements,Form,Symptom,Route,Procedure,Disease_Syndrome_Disorder,Score,Drug_Ingredient,Pulse,Frequency,Date,Body_Part,Drug_Brand_Name,Time,Direction,Dosage,Medical_Device,Imaging_Technique,Test,Imaging_Findings,Imaging_Test,Test_Result,Weight,Clinical_DeptandUnitswith SPELL and SPELL respectively -

English NER models for parsing entities in Clinical Trial Abstracts like

Age,AllocationRatio,Author,BioAndMedicalUnit,CTAnalysisApproach,CTDesign,Confidence,Country,DisorderOrSyndrome,DoseValue,Drug,DrugTime,Duration,Journal,NumberPatients,PMID,PValue,PercentagePatients,PublicationYear,TimePoint,Valueusingen.med_ner.clinical_trials_abstracts.pipeand also Pathogen NER models forPathogen,MedicalCondition,Medicinewithen.med_ner.pathogenandGENE_PROTEINwithen.med_ner.biomedical_bc2gm.pipeline -

First Public Health Model for Emotional Stress classification It is a PHS-BERT-based model and trained with the Dreaddit dataset using

en.classify.stress -

50 + new Entity Mappers for problems like :

- Extract section headers in scientific articles and normalize them with

en.map_entity.section_headers_normalized - Map medical abbreviates to their definitions with

en.map_entity.abbreviation_to_definition - Map drugs to action and treatments with

en.map_entity.drug_to_action_treatment - Map drug brand to their National Drug Code (NDC) with

en.map_entity.drug_brand_to_ndc - Convert between terminologies using

en.<START_TERMINOLOGY>_to_<TARGET_TERMINOLOGY>- This works for the terminologies

rxnorm,ndc,umls,icd10cm,icdo,umls,snomed,meshsnomed_to_icdosnomed_to_icd10cmrxnorm_to_umls

- This works for the terminologies

- powerd by Spark NLP for Healthcares ChunkMapper Annotator

- Extract section headers in scientific articles and normalize them with

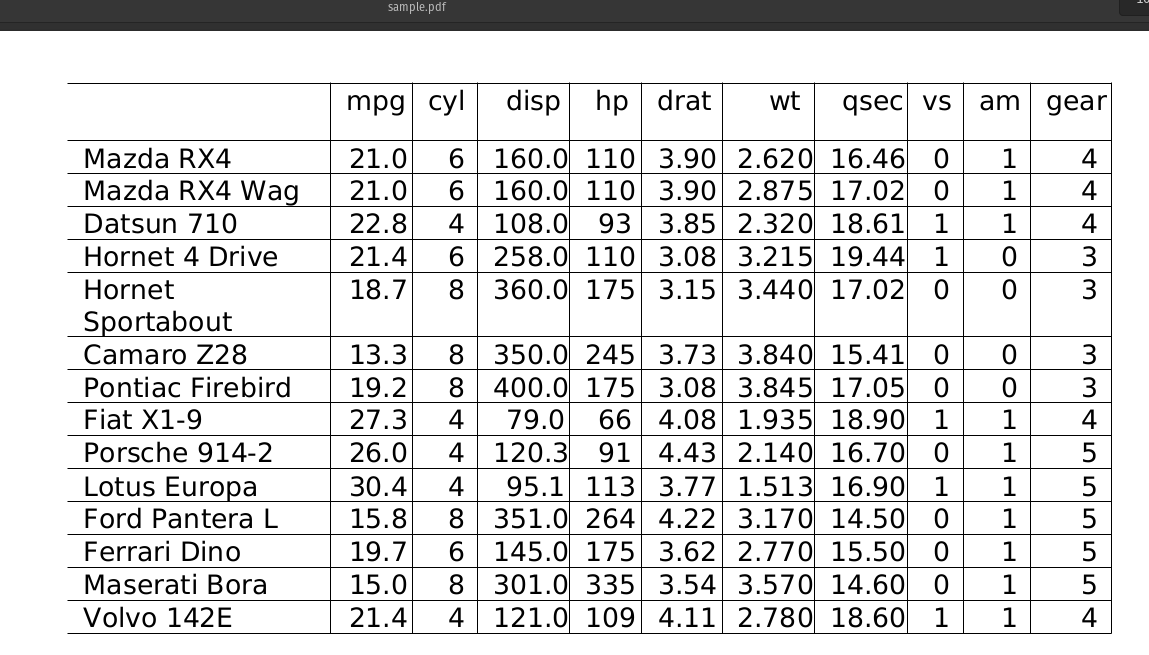

Extract Tables from PDF files as Pandas DataFrames

Sample PDF:

nlu.load('pdf2table').predict('/path/to/sample.pdf')

Output of PDF Table OCR :

| mpg | cyl | disp | hp | drat | wt | qsec | vs | am | gear |

|---|---|---|---|---|---|---|---|---|---|

| 21 | 6 | 160 | 110 | 3.9 | 2.62 | 16.46 | 0 | 1 | 4 |

| 21 | 6 | 160 | 110 | 3.9 | 2.875 | 17.02 | 0 | 1 | 4 |

| 22.8 | 4 | 108 | 93 | 3.85 | 2.32 | 18.61 | 1 | 1 | 4 |

| 21.4 | 6 | 258 | 110 | 3.08 | 3.215 | 19.44 | 1 | 0 | 3 |

| 18.7 | 8 | 360 | 175 | 3.15 | 3.44 | 17.02 | 0 | 0 | 3 |

| 13.3 | 8 | 350 | 245 | 3.73 | 3.84 | 15.41 | 0 | 0 | 3 |

| 19.2 | 8 | 400 | 175 | 3.08 | 3.845 | 17.05 | 0 | 0 | 3 |

| 27.3 | 4 | 79 | 66 | 4.08 | 1.935 | 18.9 | 1 | 1 | 4 |

| 26 | 4 | 120.3 | 91 | 4.43 | 2.14 | 16.7 | 0 | 1 | 5 |

| 30.4 | 4 | 95.1 | 113 | 3.77 | 1.513 | 16.9 | 1 | 1 | 5 |

| 15.8 | 8 | 351 | 264 | 4.22 | 3.17 | 14.5 | 0 | 1 | 5 |

| 19.7 | 6 | 145 | 175 | 3.62 | 2.77 | 15.5 | 0 | 1 | 5 |

| 15 | 8 | 301 | 335 | 3.54 | 3.57 | 14.6 | 0 | 1 | 5 |

| 21.4 | 4 | 121 | 109 | 4.11 | 2.78 | 18.6 | 1 | 1 | 4 |

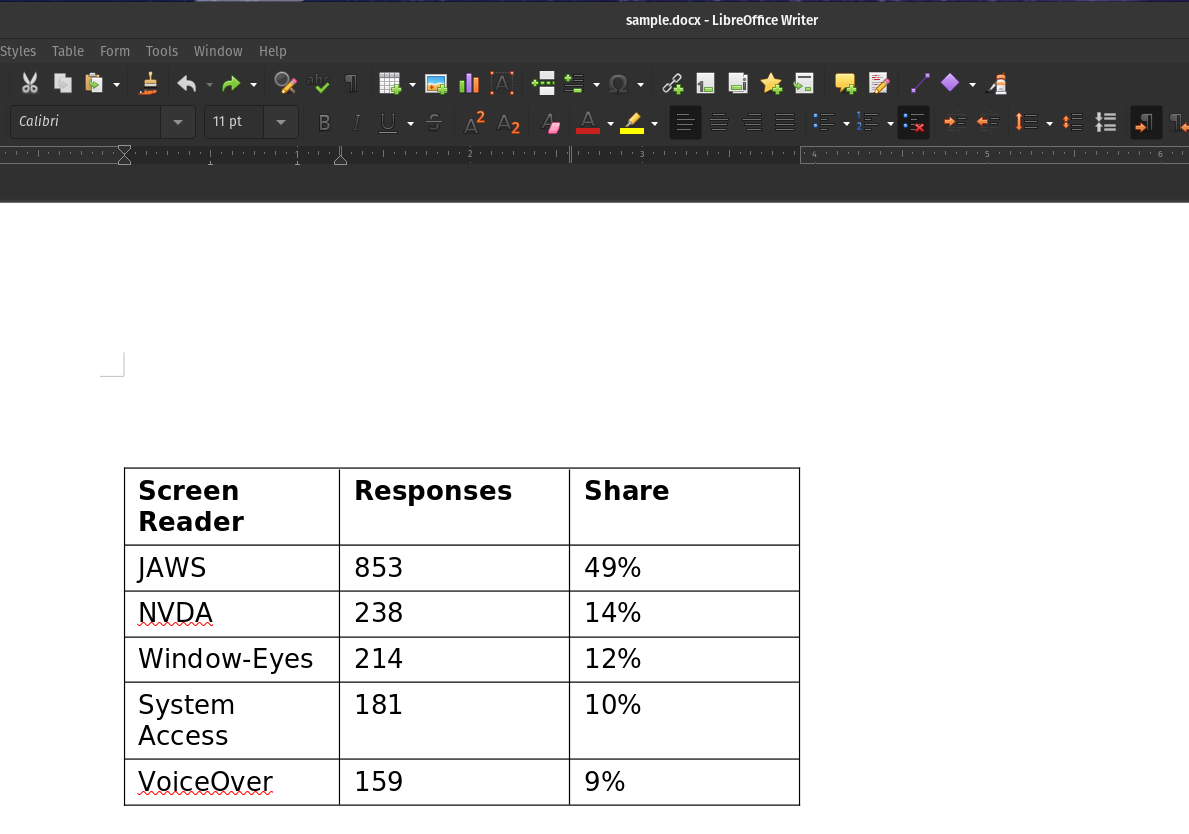

Extract Tables from DOC/DOCX files as Pandas DataFrames

Sample DOCX:

nlu.load('doc2table').predict('/path/to/sample.docx')

Output of DOCX Table OCR :

| Screen Reader | Responses | Share |

|---|---|---|

| JAWS | 853 | 49% |

| NVDA | 238 | 14% |

| Window-Eyes | 214 | 12% |

| System Access | 181 | 10% |

| VoiceOver | 159 | 9% |

Extract Tables from PPT files as Pandas DataFrame

Sample PPT with two tables:

nlu.load('ppt2table').predict('/path/to/sample.docx')

Output of PPT Table OCR :

| Sepal.Length | Sepal.Width | Petal.Length | Petal.Width | Species |

|---|---|---|---|---|

| 5.1 | 3.5 | 1.4 | 0.2 | setosa |

| 4.9 | 3 | 1.4 | 0.2 | setosa |

| 4.7 | 3.2 | 1.3 | 0.2 | setosa |

| 4.6 | 3.1 | 1.5 | 0.2 | setosa |

| 5 | 3.6 | 1.4 | 0.2 | setosa |

| 5.4 | 3.9 | 1.7 | 0.4 | setosa |

and

| Sepal.Length | Sepal.Width | Petal.Length | Petal.Width | Species |

|---|---|---|---|---|

| 6.7 | 3.3 | 5.7 | 2.5 | virginica |

| 6.7 | 3 | 5.2 | 2.3 | virginica |

| 6.3 | 2.5 | 5 | 1.9 | virginica |

| 6.5 | 3 | 5.2 | 2 | virginica |

| 6.2 | 3.4 | 5.4 | 2.3 | virginica |

| 5.9 | 3 | 5.1 | 1.8 | virginica |

Span Classifiers for question answering

Albert, Bert, DeBerta, DistilBert, LongFormer, RoBerta, XlmRoBerta based Transformer Architectures are now avaiable for question answering with almost 1000 models avaiable for 35 unique languages powerd by their corrosponding Spark NLP XXXForQuestionAnswering Annotator Classes and in various tuning and dataset flavours.

<lang>.answer_question.<domain>.<datasets>.<annotator_class><tune info>.by_<username>

If multiple datasets or tune parameters are defined , they are connected with a _ .

These substrings define up the <domain> part of the NLU reference

- Legal cuad

- COVID 19 Biomedical biosaq

- Biomedical Literature pubmed

- Twitter tweet

- Wikipedia wiki

- News news

- Tech tech

These substrings define up the <dataset> part of the NLU reference

- Arabic SQUAD ARCD

- Turkish TQUAD

- German GermanQuad

- Indonesian AQG

- Korean KLUE, KORQUAD

- HindiCHAI

- Multi-LingualMLQA

- Multi-Lingualtydiqa

- Multi-Lingualxquad

These substrings define up the <dataset> part of the NLU reference

- Alternative Eval method reqa

- Synthetic Data synqa

- Benchmark / Eval Method ABSA-Bench roberta_absa

- Arabic architecture type soqaol

These substrings define the <annotator_class> substring, if it does not map to a sparknlp annotator

These substrings define the <tune_info> substring, if it does not map to a sparknlp annotator

- Train tweaks :

multilingual,mini_lm,xtremedistiled,distilled,xtreme,augmented,zero_shot - Size tweaks

xl,xxl,large,base,medium,base,small,tiny,cased,uncased - Dimension tweaks :

1024d,768d,512d,256d,128d,64d,32d

QA DataFormat

You need to use one of the Data formats below to pass context and question correctly to the model.

# use ||| to seperate question||context

data = 'What is my name?|||My name is Clara and I live in Berkeley'

# pass a tuple (question,context)

data = ('What is my name?','My name is Clara and I live in Berkeley')

# use pandas Dataframe, one column = question, one column=context

data = pd.DataFrame({

'question': ['What is my name?'],

'context': ["My name is Clara and I live in Berkely"]

})

# Get your answers with any of above formats

nlu.load("en.answer_question.squadv2.deberta").predict(data)

returns :

| answer | answer_confidence | context | question |

|---|---|---|---|

| Clara | 0.994931 | My name is Clara and I live in Berkely | What is my name? |

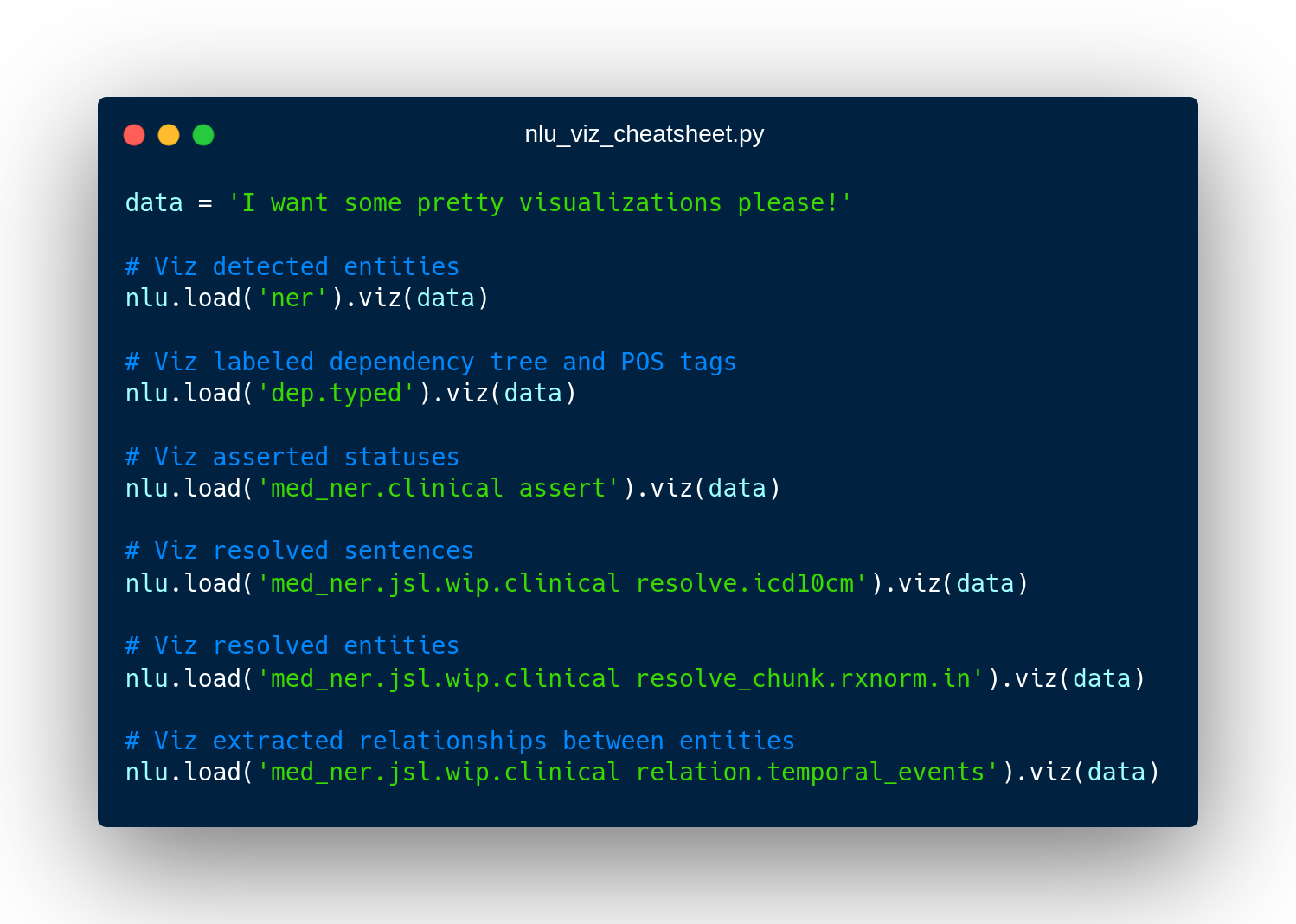

New NLU helper Methods

You can see all features showcased in the notebook or on the new docs page for Spark NLP utils

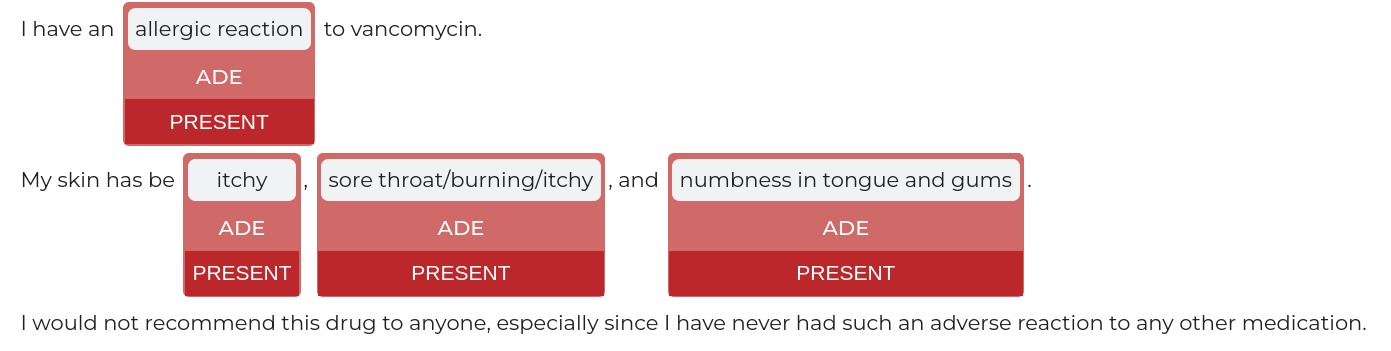

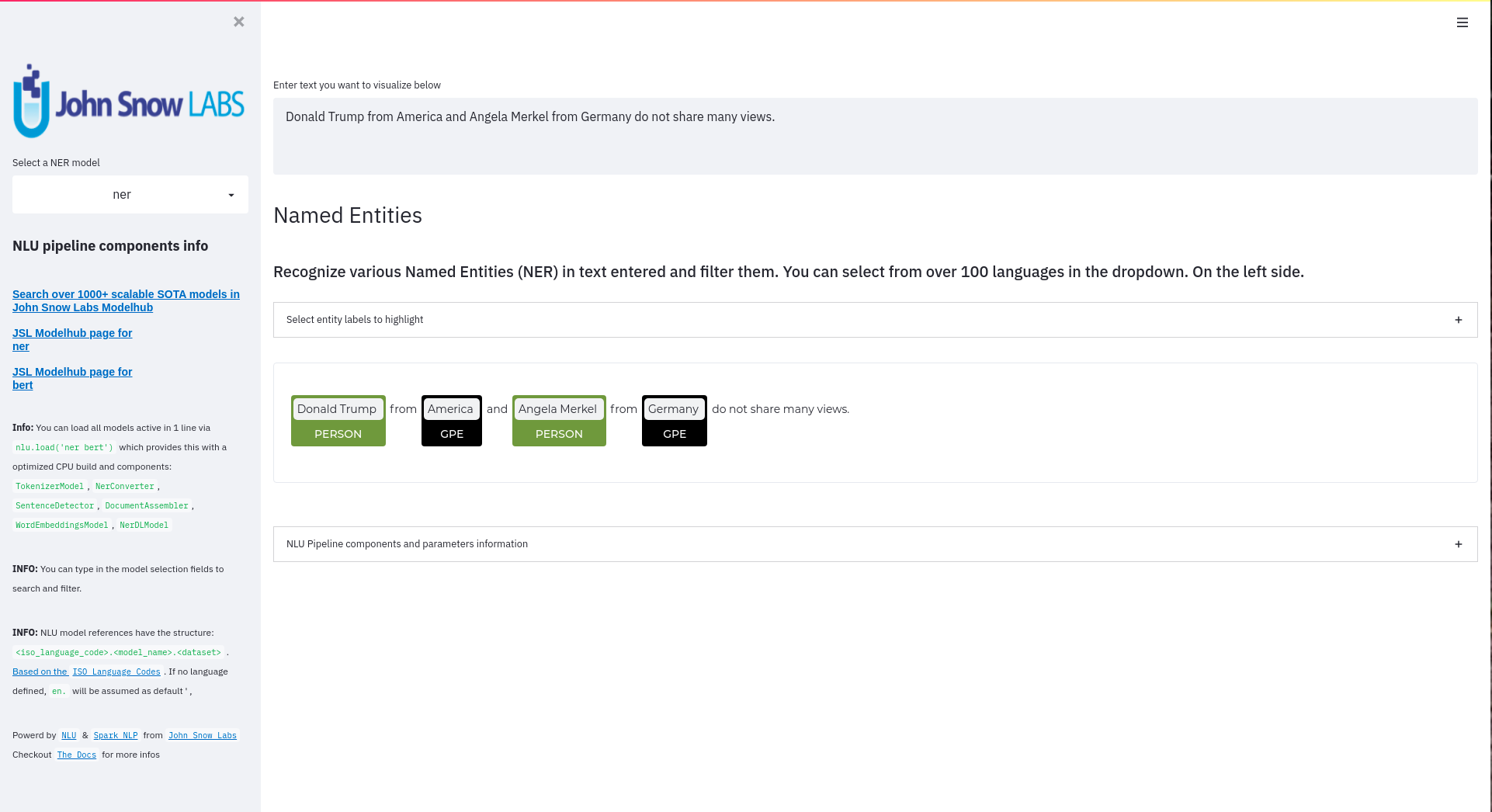

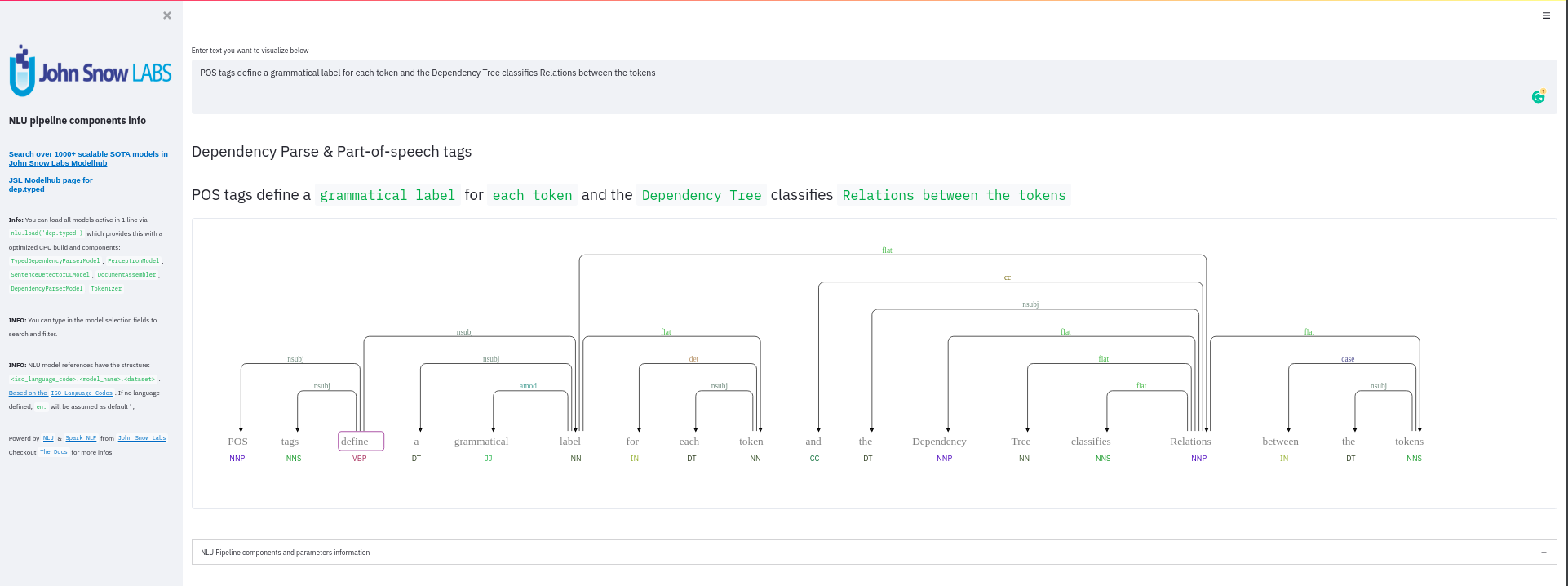

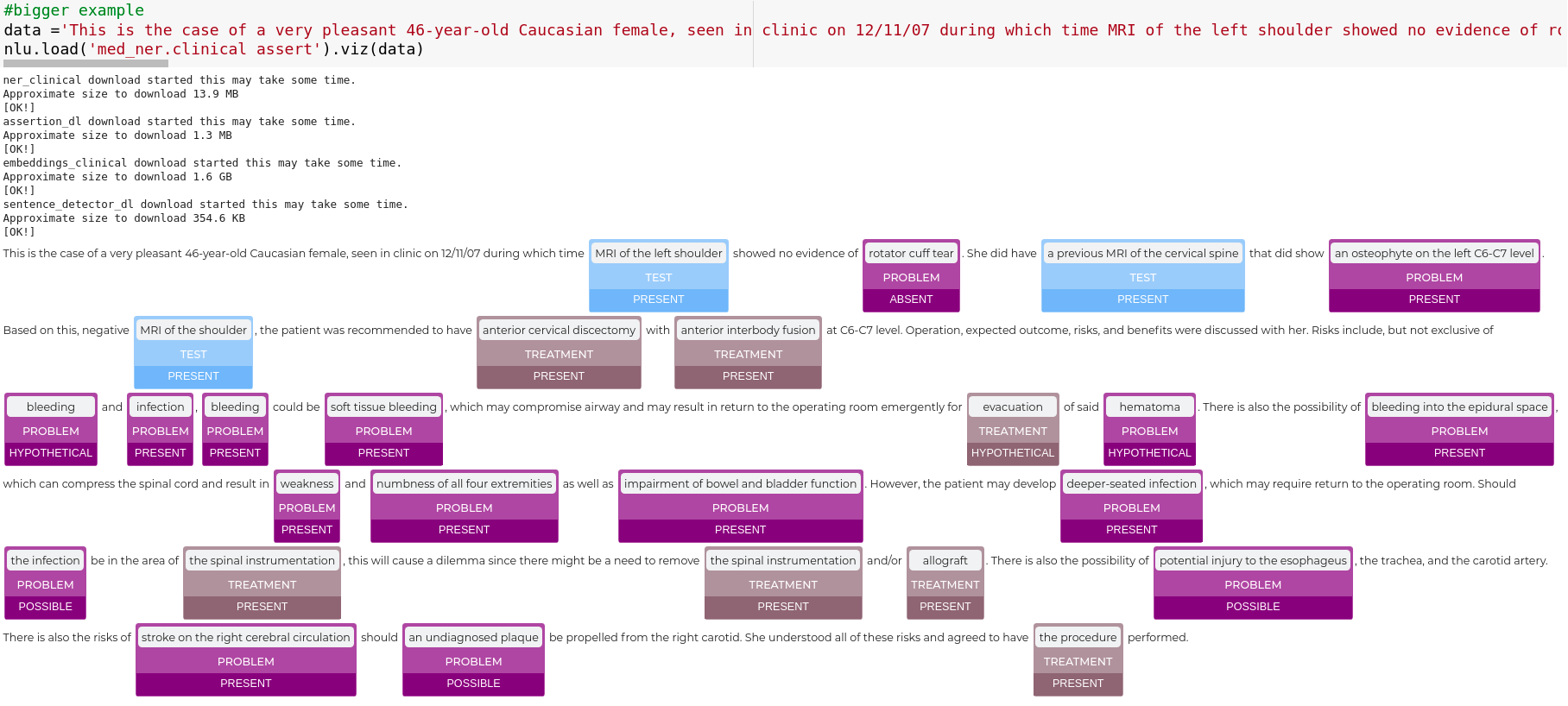

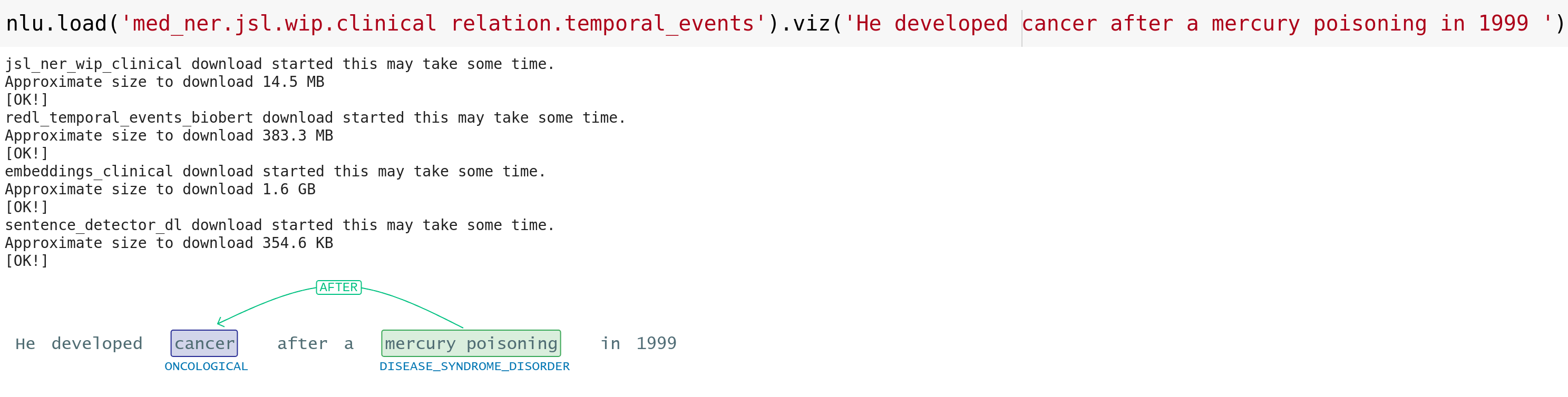

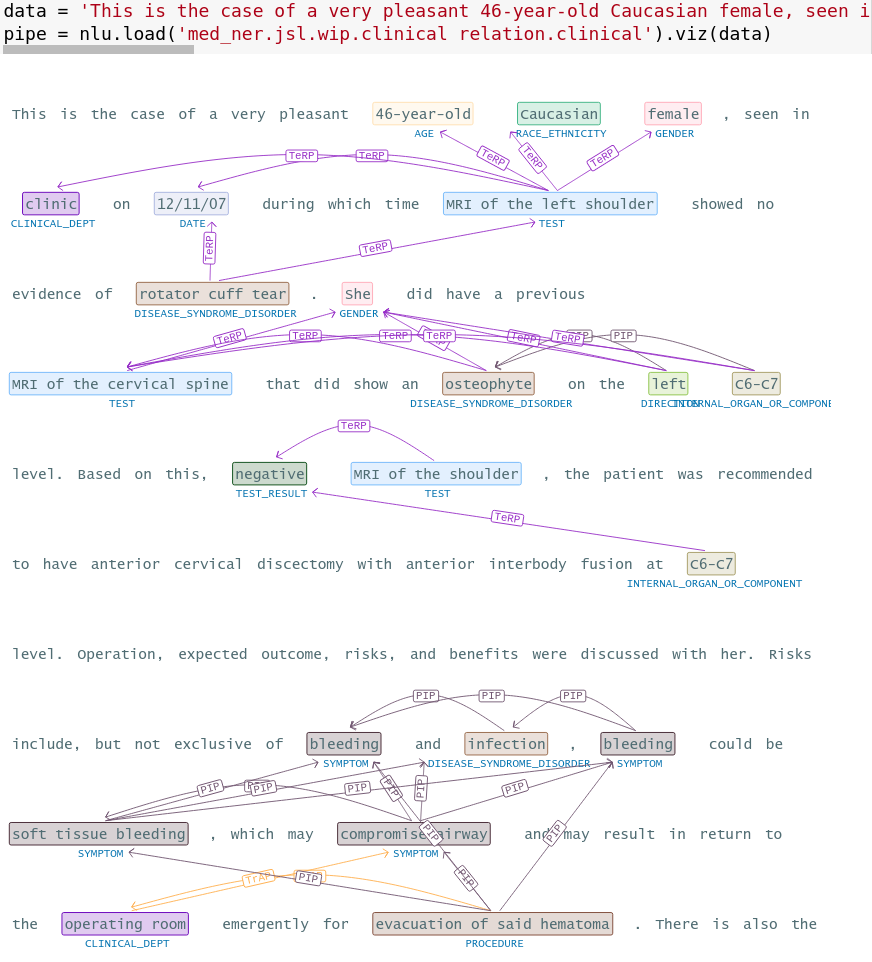

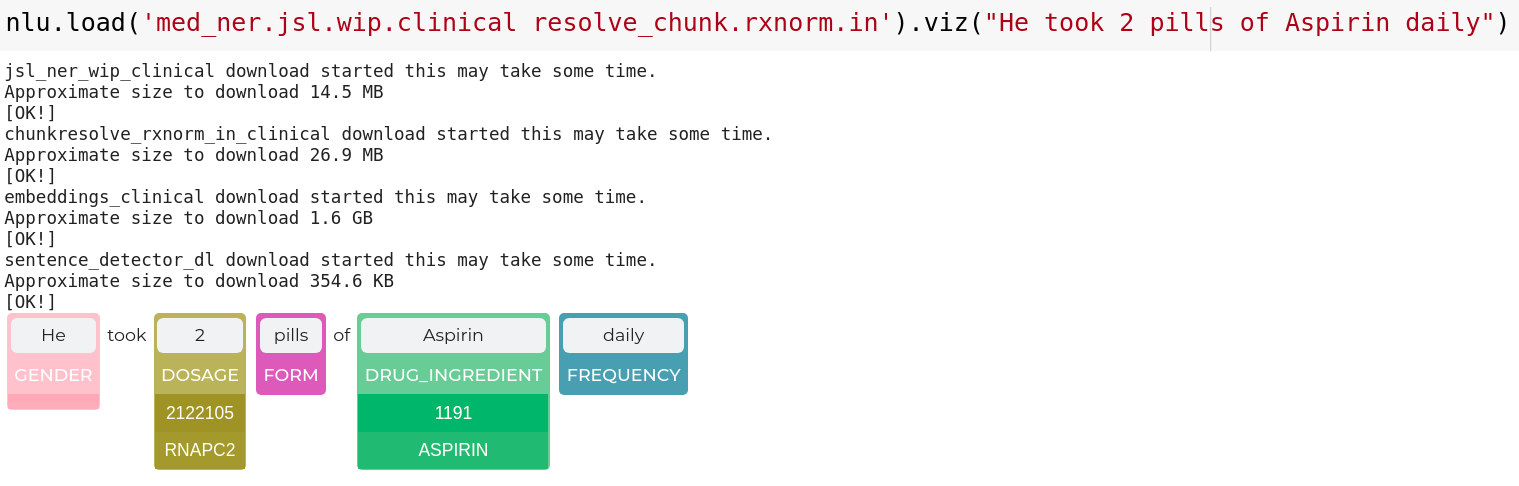

nlu.viz(pipe,data)

Visualize input data with an already configured Spark NLP pipeline,

for Algorithms of type (Ner,Assertion, Relation, Resolution, Dependency)

using Spark NLP Display

Automatically infers applicable viz type and output columns to use for visualization.

Example:

# works with Pipeline, LightPipeline, PipelineModel,PretrainedPipeline List[Annotator]

ade_pipeline = PretrainedPipeline('explain_clinical_doc_ade', 'en', 'clinical/models')

text = """I have an allergic reaction to vancomycin.

My skin has be itchy, sore throat/burning/itchy, and numbness in tongue and gums.

I would not recommend this drug to anyone, especially since I have never had such an adverse reaction to any other medication."""

nlu.viz(ade_pipeline, text)

returns:

If a pipeline has multiple models candidates that can be used for a viz,

the first Annotator that is vizzable will be used to create viz.

You can specify which type of viz to create with the viz_type parameter

Output columns to use for the viz are automatically deducted from the pipeline, by using the

first annotator that provides the correct output type for a specific viz.

You can specify which columns to use for a viz by using the

corresponding ner_col, pos_col, dep_untyped_col, dep_typed_col, resolution_col, relation_col, assertion_col, parameters.

nlu.autocomplete_pipeline(pipe)

Auto-Complete a pipeline or single annotator into a runnable pipeline by harnessing NLU’s DAG Autocompletion algorithm and returns it as NLU pipeline.

The standard Spark pipeline is avaiable on the .vanilla_transformer_pipe attribute of the returned nlu pipe

Every Annotator and Pipeline of Annotators defines a DAG of tasks, with various dependencies that must be satisfied in topoligical order.

NLU enables the completion of an incomplete DAG by finding or creating a path between

the very first input node which is almost always is DocumentAssembler/MultiDocumentAssembler

and the very last node(s), which is given by the topoligical sorting the iterable annotators parameter.

Paths are created by resolving input features of annotators to the corrrosponding providers with matching storage references.

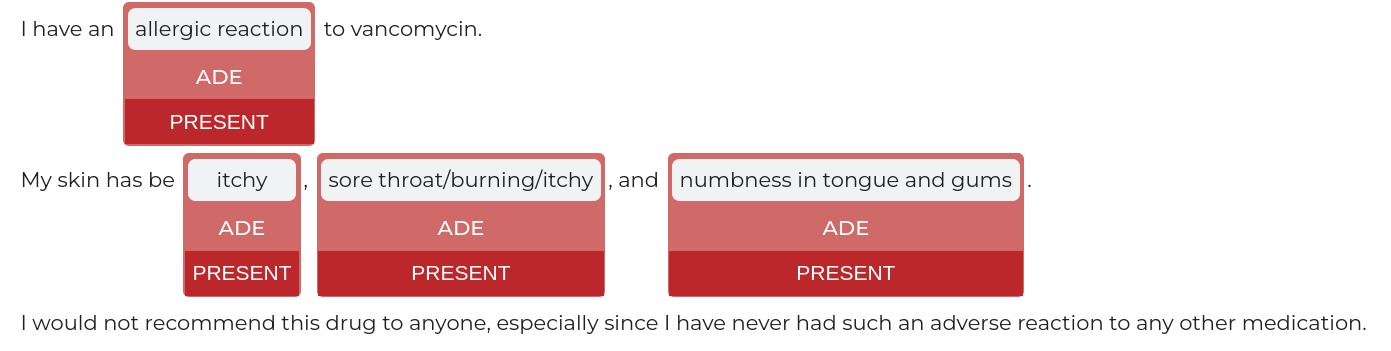

Example:

# Lets autocomplete the pipeline for a RelationExtractionModel, which as many input columns and sub-dependencies.

from sparknlp_jsl.annotator import RelationExtractionModel

re_model = RelationExtractionModel().pretrained("re_ade_clinical", "en", 'clinical/models').setOutputCol('relation')

text = """I have an allergic reaction to vancomycin.

My skin has be itchy, sore throat/burning/itchy, and numbness in tongue and gums.

I would not recommend this drug to anyone, especially since I have never had such an adverse reaction to any other medication."""

nlu_pipe = nlu.autocomplete_pipeline(re_model)

nlu_pipe.predict(text)

returns :

| relation | relation_confidence | relation_entity1 | relation_entity2 | relation_entity2_class |

|---|---|---|---|---|

| 1 | 1 | allergic reaction | vancomycin | Drug_Ingredient |

| 1 | 1 | skin | itchy | Symptom |

| 1 | 0.99998 | skin | sore throat/burning/itchy | Symptom |

| 1 | 0.956225 | skin | numbness | Symptom |

| 1 | 0.999092 | skin | tongue | External_body_part_or_region |

| 0 | 0.942927 | skin | gums | External_body_part_or_region |

| 1 | 0.806327 | itchy | sore throat/burning/itchy | Symptom |

| 1 | 0.526163 | itchy | numbness | Symptom |

| 1 | 0.999947 | itchy | tongue | External_body_part_or_region |

| 0 | 0.994618 | itchy | gums | External_body_part_or_region |

| 0 | 0.994162 | sore throat/burning/itchy | numbness | Symptom |

| 1 | 0.989304 | sore throat/burning/itchy | tongue | External_body_part_or_region |

| 0 | 0.999969 | sore throat/burning/itchy | gums | External_body_part_or_region |

| 1 | 1 | numbness | tongue | External_body_part_or_region |

| 1 | 1 | numbness | gums | External_body_part_or_region |

| 1 | 1 | tongue | gums | External_body_part_or_region |

nlu.to_pretty_df(pipe,data)

Annotates a Pandas Dataframe/Pandas Series/Numpy Array/Spark DataFrame/Python List strings /Python String

with given Spark NLP pipeline, which is assumed to be complete and runnable and returns it in a pythonic pandas dataframe format.

Example:

# works with Pipeline, LightPipeline, PipelineModel,PretrainedPipeline List[Annotator]

ade_pipeline = PretrainedPipeline('explain_clinical_doc_ade', 'en', 'clinical/models')

text = """I have an allergic reaction to vancomycin.

My skin has be itchy, sore throat/burning/itchy, and numbness in tongue and gums.

I would not recommend this drug to anyone, especially since I have never had such an adverse reaction to any other medication."""

# output is same as nlu.autocomplete_pipeline(re_model).nlu_pipe.predict(text)

nlu.to_pretty_df(ade_pipeline,text)

returns :

| assertion | asserted_entitiy | entitiy_class | assertion_confidence |

|---|---|---|---|

| present | allergic reaction | ADE | 0.998 |

| present | itchy | ADE | 0.8414 |

| present | sore throat/burning/itchy | ADE | 0.9019 |

| present | numbness in tongue and gums | ADE | 0.9991 |

Annotators are grouped internally by NLU into output levels token,sentence, document,chunk and relation

Same level annotators output columns are zipped and exploded together to create the final output df.

Additionally, most keys from the metadata dictionary in the result annotations will be collected and expanded into their own columns in the resulting Dataframe, with special handling for Annotators that encode multiple metadata fields inside of one, seperated by strings like ||| or :::.

Some columns are omitted from metadata to reduce total amount of output columns, these can be re-enabled by setting metadata=True

For a given pipeline output level is automatically set to the last anntators output level by default.

This can be changed by defining to_preddty_df(pipe,text,output_level='my_level' for levels token,sentence, document,chunk and relation .

nlu.to_nlu_pipe(pipe)

Convert a pipeline or list of annotators into a NLU pipeline making .predict() and .viz() avaiable for every Spark NLP pipeline.

Assumes the pipeline is already runnable.

# works with Pipeline, LightPipeline, PipelineModel,PretrainedPipeline List[Annotator]

ade_pipeline = PretrainedPipeline('explain_clinical_doc_ade', 'en', 'clinical/models')

text = """I have an allergic reaction to vancomycin.

My skin has be itchy, sore throat/burning/itchy, and numbness in tongue and gums.

I would not recommend this drug to anyone, especially since I have never had such an adverse reaction to any other medication."""

nlu_pipe = nlu.to_nlu_pipe(ade_pipeline)

# Same output as nlu.to_pretty_df(pipe,text)

nlu_pipe.predict(text)

# same output as nlu.viz(pipe,text)

nlu_pipe.viz(text)

# Acces auto-completed Spark NLP big data pipeline,

nlu_pipe.vanilla_transformer_pipe.transform(spark_df)

returns :

| assertion | asserted_entitiy | entitiy_class | assertion_confidence |

|---|---|---|---|

| present | allergic reaction | ADE | 0.998 |

| present | itchy | ADE | 0.8414 |

| present | sore throat/burning/itchy | ADE | 0.9019 |

| present | numbness in tongue and gums | ADE | 0.9991 |

and

4 new Demo Notebooks

These notebooks showcase some of latest classifier models for Banking Queries, Intents in Text, Question and new s classification

- Notebook for Classification of Banking Queries

- Notebook for Classification of Intent in Texts

- Notebook for classification of Similar Questions

- Notebook for Classification of Questions vs Statements

- Notebook for Classification of News into 4 classes

NLU captures every Annotator of Spark NLP and Spark NLP for healthcare

The entire universe of Annotators in Spark NLP and Spark-NLP for healthcare is now embellished by NLU Components by using generalizable annotation extractors methods and configs internally to support enable the new NLU util methods. The following annotator classes are newly captured:

- AssertionFilterer

- ChunkConverter

- ChunkKeyPhraseExtraction

- ChunkSentenceSplitter

- ChunkFiltererApproach

- ChunkFilterer

- ChunkMapperApproach

- ChunkMapperFilterer

- DocumentLogRegClassifierApproach

- DocumentLogRegClassifierModel

- ContextualParserApproach

- ReIdentification

- NerDisambiguator

- NerDisambiguatorModel

- AverageEmbeddings

- EntityChunkEmbeddings

- ChunkMergeApproach

- ChunkMergeApproach

- IOBTagger

- NerChunker

- NerConverterInternalModel

- DateNormalizer

- PosologyREModel

- RENerChunksFilter

- ResolverMerger

- AnnotationMerger

- Router

- Word2VecApproach

- WordEmbeddings

- EntityRulerApproach

- EntityRulerModel

- TextMatcherModel

- BigTextMatcher

- BigTextMatcherModel

- DateMatcher

- MultiDateMatcher

- RegexMatcher

- TextMatcher

- NerApproach

- NerCrfApproach

- NerOverwriter

- DependencyParserApproach

- TypedDependencyParserApproach

- SentenceDetectorDLApproach

- SentimentDetector

- ViveknSentimentApproach

- ContextSpellCheckerApproach

- NorvigSweetingApproach

- SymmetricDeleteApproach

- ChunkTokenizer

- ChunkTokenizerModel

- RecursiveTokenizer

- RecursiveTokenizerModel

- Token2Chunk

- WordSegmenterApproach

- GraphExtraction

- Lemmatizer

- Normalizer

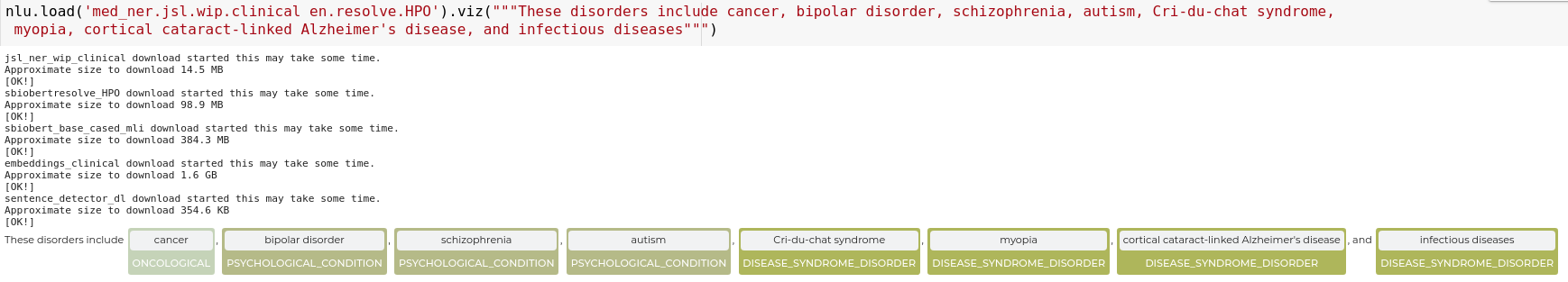

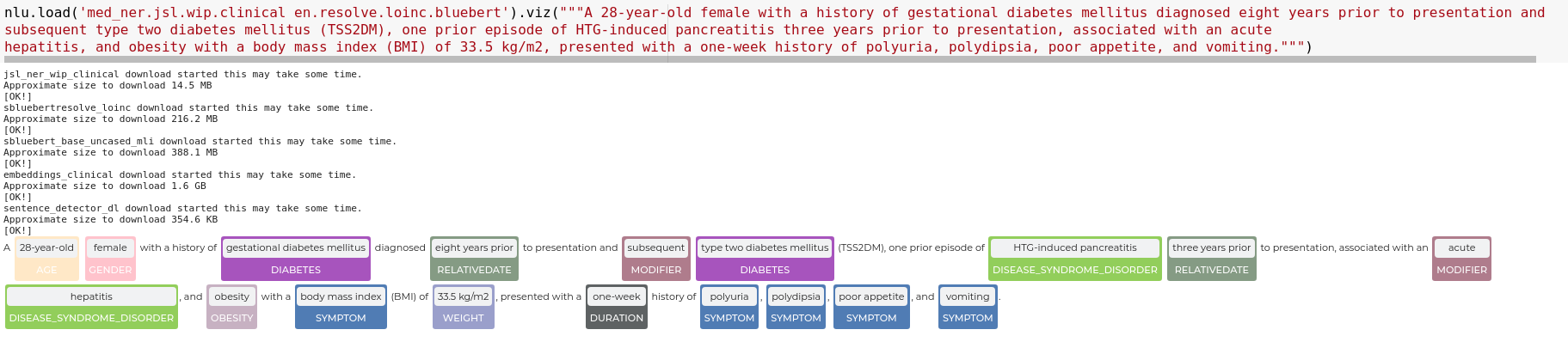

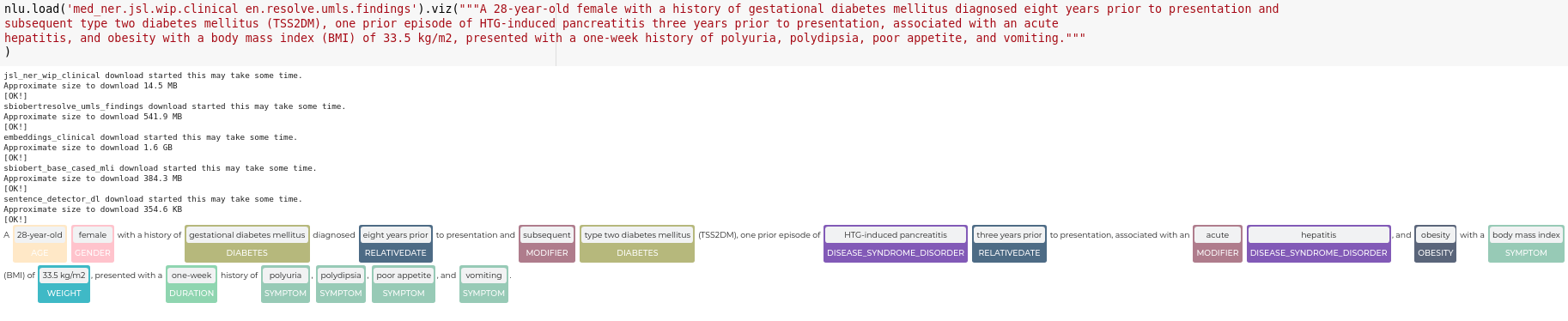

All NLU 4.0 for Healthcare Models

Some examples:

en.rxnorm.umls.mapping

Code:

nlu.load('en.rxnorm.umls.mapping').predict('1161611 315677')

| mapped_entity_umls_code_origin_entity | mapped_entity_umls_code |

|---|---|

| 1161611 | C3215948 |

| 315677 | C0984912 |

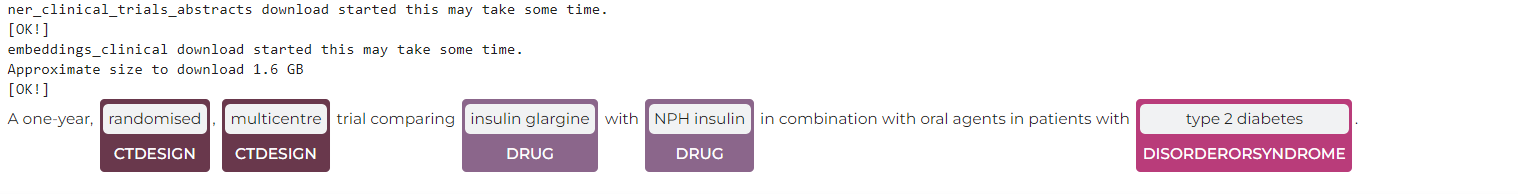

en.ner.clinical_trials_abstracts

Code:

nlu.load('en.ner.clinical_trials_abstracts').predict('A one-year, randomised, multicentre trial comparing insulin glargine with NPH insulin in combination with oral agents in patients with type 2 diabetes.')

Results:

| entities_clinical_trials_abstracts | entities_clinical_trials_abstracts_class | entities_clinical_trials_abstracts_confidence | |

|---|---|---|---|

| 0 | randomised | CTDesign | 0.9996 |

| 0 | multicentre | CTDesign | 0.9998 |

| 0 | insulin glargine | Drug | 0.99135 |

| 0 | NPH insulin | Drug | 0.96875 |

| 0 | type 2 diabetes | DisorderOrSyndrome | 0.999933 |

Code:

nlu.load('en.ner.clinical_trials_abstracts').viz('A one-year, randomised, multicentre trial comparing insulin glargine with NPH insulin in combination with oral agents in patients with type 2 diabetes.')

Results:

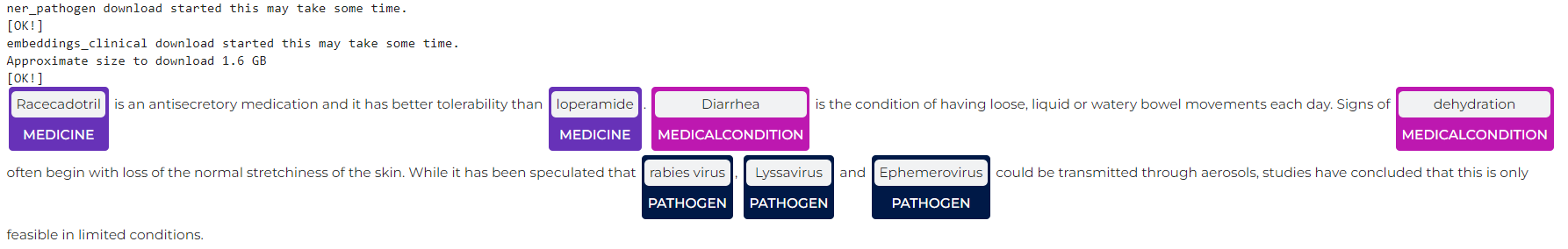

en.med_ner.pathogen

Code:

nlu.load('en.med_ner.pathogen').predict('Racecadotril is an antisecretory medication and it has better tolerability than loperamide. Diarrhea is the condition of having loose, liquid or watery bowel movements each day. Signs of dehydration often begin with loss of the normal stretchiness of the skin. While it has been speculated that rabies virus, Lyssavirus and Ephemerovirus could be transmitted through aerosols, studies have concluded that this is only feasible in limited conditions.')

Results:

| entities_pathogen | entities_pathogen_class | entities_pathogen_confidence | |

|---|---|---|---|

| 0 | Racecadotril | Medicine | 0.9468 |

| 0 | loperamide | Medicine | 0.9987 |

| 0 | Diarrhea | MedicalCondition | 0.9848 |

| 0 | dehydration | MedicalCondition | 0.6307 |

| 0 | rabies virus | Pathogen | 0.95685 |

| 0 | Lyssavirus | Pathogen | 0.9694 |

| 0 | Ephemerovirus | Pathogen | 0.6917 |

Code:

nlu.load('en.med_ner.pathogen').viz('Racecadotril is an antisecretory medication and it has better tolerability than loperamide. Diarrhea is the condition of having loose, liquid or watery bowel movements each day. Signs of dehydration often begin with loss of the normal stretchiness of the skin. While it has been speculated that rabies virus, Lyssavirus and Ephemerovirus could be transmitted through aerosols, studies have concluded that this is only feasible in limited conditions.')

Results:

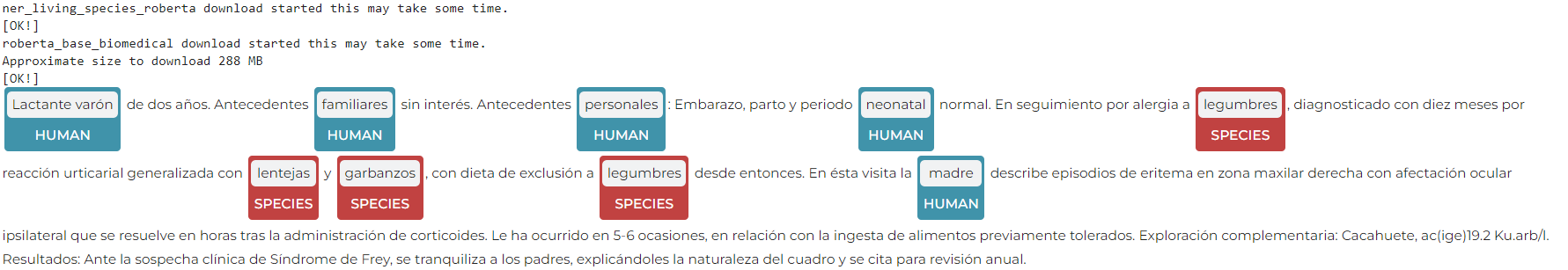

es.med_ner.living_species.roberta

Code:

nlu.load('es.med_ner.living_species.roberta').predict('Lactante varón de dos años. Antecedentes familiares sin interés. Antecedentes personales: Embarazo, parto y periodo neonatal normal. En seguimiento por alergia a legumbres, diagnosticado con diez meses por reacción urticarial generalizada con lentejas y garbanzos, con dieta de exclusión a legumbres desde entonces. En ésta visita la madre describe episodios de eritema en zona maxilar derecha con afectación ocular ipsilateral que se resuelve en horas tras la administración de corticoides. Le ha ocurrido en 5-6 ocasiones, en relación con la ingesta de alimentos previamente tolerados. Exploración complementaria: Cacahuete, ac(ige)19.2 Ku.arb/l. Resultados: Ante la sospecha clínica de Síndrome de Frey, se tranquiliza a los padres, explicándoles la naturaleza del cuadro y se cita para revisión anual.')

Results:

| entities_living_species | entities_living_species_class | entities_living_species_confidence | |

|---|---|---|---|

| 0 | Lactante varón | HUMAN | 0.93175 |

| 0 | familiares | HUMAN | 1 |

| 0 | personales | HUMAN | 1 |

| 0 | neonatal | HUMAN | 0.9997 |

| 0 | legumbres | SPECIES | 0.9962 |

| 0 | lentejas | SPECIES | 0.9988 |

| 0 | garbanzos | SPECIES | 0.9901 |

| 0 | legumbres | SPECIES | 0.9976 |

| 0 | madre | HUMAN | 1 |

| 0 | Cacahuete | SPECIES | 0.998 |

| 0 | padres | HUMAN | 1 |

Code:

nlu.load('es.med_ner.living_species.roberta').viz('Lactante varón de dos años. Antecedentes familiares sin interés. Antecedentes personales: Embarazo, parto y periodo neonatal normal. En seguimiento por alergia a legumbres, diagnosticado con diez meses por reacción urticarial generalizada con lentejas y garbanzos, con dieta de exclusión a legumbres desde entonces. En ésta visita la madre describe episodios de eritema en zona maxilar derecha con afectación ocular ipsilateral que se resuelve en horas tras la administración de corticoides. Le ha ocurrido en 5-6 ocasiones, en relación con la ingesta de alimentos previamente tolerados. Exploración complementaria: Cacahuete, ac(ige)19.2 Ku.arb/l. Resultados: Ante la sospecha clínica de Síndrome de Frey, se tranquiliza a los padres, explicándoles la naturaleza del cuadro y se cita para revisión anual.')

Results:

All healthcare models added in NLU 4.0 :

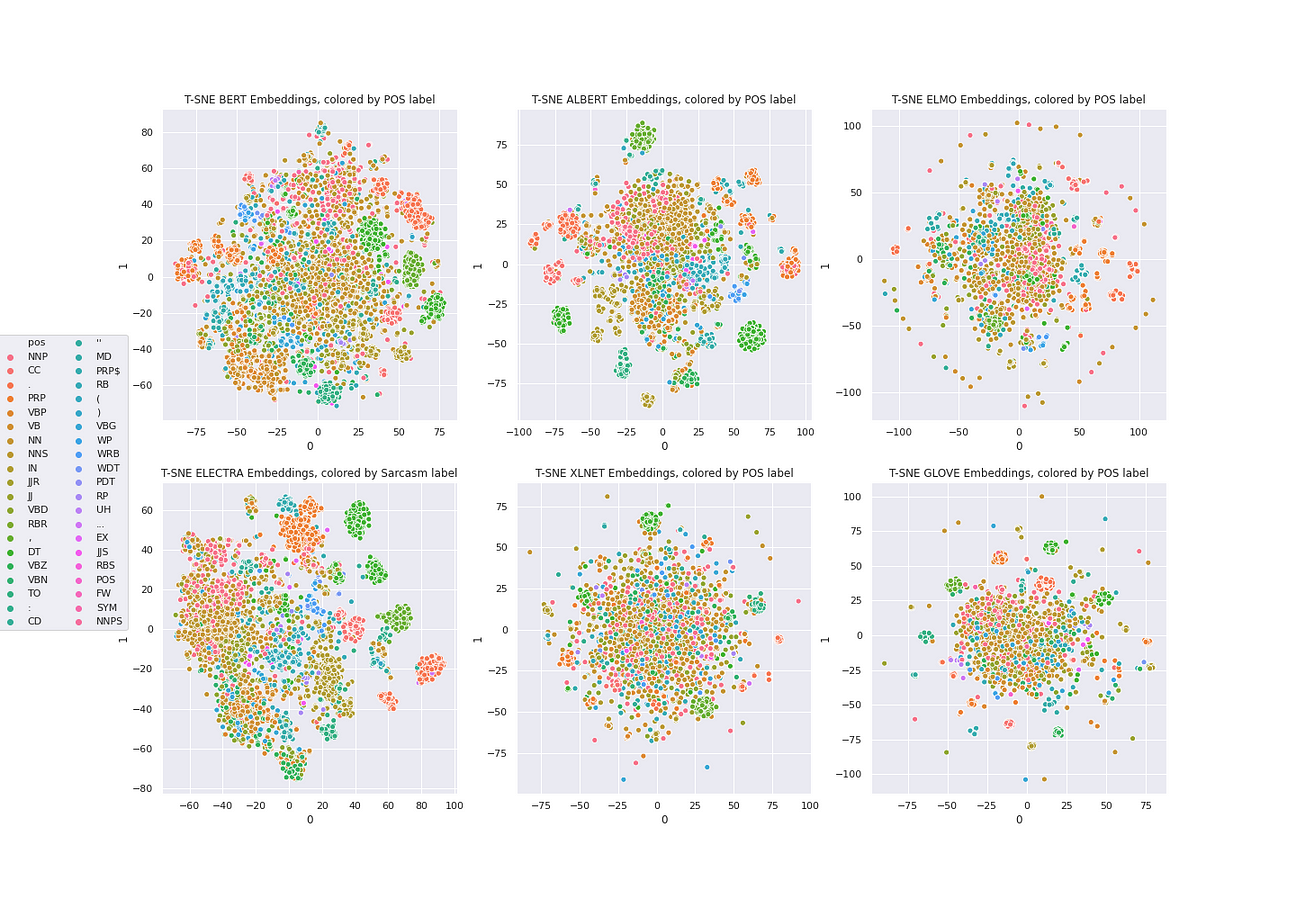

All NLU 4.0 Core Models

All core models added in NLU 4.0 : Can be found on the NLU website because of Github Limitations

Minor Improvements

- IOB Schema Detection for Tokenclassifiers and adding NER Converting in those cases

- Tweaks in column name generation of most annotators

Bug Fixes

- fixed bug in multi lang parsing

- fixed bug for Normalizers

- fixed bug in fetching metadata for resolvers

- fixed bug in deducting outputlevel and inferring output columns

- fixed broken nlp_refs

NLU Version 3.4.4

600 new models with over 75 new languages including Ancient,Dead and Extinct languages, 155 languages total covered, 400% Tokenizer Speedup, 18x USE-Embeddings GPU speedup in John Snow Labs NLU 3.4.4

We are very excited to announce NLU 3.4.4 has been released with over 600 new model, over 75 new languages and 155 languages covered in total,

400% speedup for tokenizers and 18x speedup of UniversalSentenceEncoder on GPU.

On the general NLP side we have transformer based Embeddings and Token Classifiers powered by state of the art CamemBertEmbeddings and DeBertaForTokenClassification based

architectures as well as various new models for

Historical, Ancient,Dead, Extinct, Genetic and Constructed languages like

Old Church Slavonic, Latin, Sanskrit, Esperanto, Volapük, Coptic, Nahuatl, Ancient Greek (to 1453), Old Russian.

On the healthcare side we have Portuguese De-identification Models, have NER models for Gene detection and finally RxNorm Sentence resolution model for mapping and extracting pharmaceutical actions (e.g. analgesic, hypoglycemic)

as well as treatments (e.g. backache, diabetes).

General NLP Models

All general NLP models

First time language models covered

The languages for these models are covered for the very first time ever by NLU.

| Number | Language Name(s) | NLU Reference | Spark NLP Reference | Task | Annotator Class | ISO-639-1 | ISO-639-2/639-5 | ISO-639-3 | Scope | Language Type |

|---|---|---|---|---|---|---|---|---|---|---|

| 0 | Sanskrit | sa.embed.w2v_cc_300d | w2v_cc_300d | Embeddings | WordEmbeddingsModel | sa | san | san | Individual | Ancient |

| 1 | Sanskrit | sa.lemma | lemma_vedic | Lemmatization | LemmatizerModel | sa | san | san | Individual | Ancient |

| 2 | Sanskrit | sa.pos | pos_vedic | Part of Speech Tagging | PerceptronModel | sa | san | san | Individual | Ancient |

| 3 | Sanskrit | sa.stopwords | stopwords_iso | Stop Words Removal | StopWordsCleaner | sa | san | san | Individual | Ancient |

| 4 | Volapük | vo.embed.w2v_cc_300d | w2v_cc_300d | Embeddings | WordEmbeddingsModel | vo | vol | vol | Individual | Constructed |

| 5 | Nahuatl languages | nah.embed.w2v_cc_300d | w2v_cc_300d | Embeddings | WordEmbeddingsModel | nan | nah | nan | Collective | Genetic |

| 6 | Aragonese | an.embed.w2v_cc_300d | w2v_cc_300d | Embeddings | WordEmbeddingsModel | an | arg | arg | Individual | Living |

| 7 | Assamese | as.embed.w2v_cc_300d | w2v_cc_300d | Embeddings | WordEmbeddingsModel | as | asm | asm | Individual | Living |

| 8 | Asturian, Asturleonese, Bable, Leonese | ast.embed.w2v_cc_300d | w2v_cc_300d | Embeddings | WordEmbeddingsModel | nan | ast | ast | Individual | Living |

| 9 | Bashkir | ba.embed.w2v_cc_300d | w2v_cc_300d | Embeddings | WordEmbeddingsModel | ba | bak | bak | Individual | Living |

| 10 | Bavarian | bar.embed.w2v_cc_300d | w2v_cc_300d | Embeddings | WordEmbeddingsModel | nan | nan | bar | Individual | Living |

| 11 | Bishnupriya | bpy.embed.w2v_cc_300d | w2v_cc_300d | Embeddings | WordEmbeddingsModel | nan | nan | bpy | Individual | Living |

| 12 | Burmese | my.embed.w2v_cc_300d | w2v_cc_300d | Embeddings | WordEmbeddingsModel | my | 639-2/T: mya639-2/B: bur | mya | Individual | Living |

| 13 | Cebuano | ceb.embed.w2v_cc_300d | w2v_cc_300d | Embeddings | WordEmbeddingsModel | nan | ceb | ceb | Individual | Living |

| 14 | Central Bikol | bcl.embed.w2v_cc_300d | w2v_cc_300d | Embeddings | WordEmbeddingsModel | nan | nan | bcl | Individual | Living |

| 15 | Chechen | ce.embed.w2v_cc_300d | w2v_cc_300d | Embeddings | WordEmbeddingsModel | ce | che | che | Individual | Living |

| 16 | Chuvash | cv.embed.w2v_cc_300d | w2v_cc_300d | Embeddings | WordEmbeddingsModel | cv | chv | chv | Individual | Living |

| 17 | Corsican | co.embed.w2v_cc_300d | w2v_cc_300d | Embeddings | WordEmbeddingsModel | co | cos | cos | Individual | Living |

| 18 | Dhivehi, Divehi, Maldivian | dv.embed.w2v_cc_300d | w2v_cc_300d | Embeddings | WordEmbeddingsModel | dv | div | div | Individual | Living |

| 19 | Egyptian Arabic | arz.embed.w2v_cc_300d | w2v_cc_300d | Embeddings | WordEmbeddingsModel | nan | nan | arz | Individual | Living |

| 20 | Emiliano-Romagnolo | eml.embed.w2v_cc_300d | w2v_cc_300d | Embeddings | WordEmbeddingsModel | eml | nan | nan | Individual | Living |

| 21 | Erzya | myv.embed.w2v_cc_300d | w2v_cc_300d | Embeddings | WordEmbeddingsModel | nan | myv | myv | Individual | Living |

| 22 | Georgian | ka.embed.w2v_cc_300d | w2v_cc_300d | Embeddings | WordEmbeddingsModel | ka | 639-2/T: kat639-2/B: geo | kat | Individual | Living |

| 23 | Goan Konkani | gom.embed.w2v_cc_300d | w2v_cc_300d | Embeddings | WordEmbeddingsModel | nan | nan | gom | Individual | Living |

| 24 | Javanese | jv.embed.distilbert | distilbert_embeddings_javanese_distilbert_small | Embeddings | DistilBertEmbeddings | jv | jav | jav | Individual | Living |

| 25 | Javanese | jv.embed.javanese_distilbert_small_imdb | distilbert_embeddings_javanese_distilbert_small_imdb | Embeddings | DistilBertEmbeddings | jv | jav | jav | Individual | Living |

| 26 | Javanese | jv.embed.javanese_roberta_small | roberta_embeddings_javanese_roberta_small | Embeddings | RoBertaEmbeddings | jv | jav | jav | Individual | Living |

| 27 | Javanese | jv.embed.javanese_roberta_small_imdb | roberta_embeddings_javanese_roberta_small_imdb | Embeddings | RoBertaEmbeddings | jv | jav | jav | Individual | Living |

| 28 | Javanese | jv.embed.javanese_bert_small_imdb | bert_embeddings_javanese_bert_small_imdb | Embeddings | BertEmbeddings | jv | jav | jav | Individual | Living |

| 29 | Javanese | jv.embed.javanese_bert_small | bert_embeddings_javanese_bert_small | Embeddings | BertEmbeddings | jv | jav | jav | Individual | Living |

| 30 | Kirghiz, Kyrgyz | ky.stopwords | stopwords_iso | Stop Words Removal | StopWordsCleaner | ky | kir | kir | Individual | Living |

| 31 | Letzeburgesch, Luxembourgish | lb.stopwords | stopwords_iso | Stop Words Removal | StopWordsCleaner | lb | ltz | ltz | Individual | Living |

| 32 | Letzeburgesch, Luxembourgish | lb.lemma | lemma_spacylookup | Lemmatization | LemmatizerModel | lb | ltz | ltz | Individual | Living |

| 33 | Letzeburgesch, Luxembourgish | lb.embed.w2v_cc_300d | w2v_cc_300d | Embeddings | WordEmbeddingsModel | lb | ltz | ltz | Individual | Living |

| 34 | Ligurian | lij.stopwords | stopwords_iso | Stop Words Removal | StopWordsCleaner | nan | nan | lij | Individual | Living |

| 35 | Lombard | lmo.embed.w2v_cc_300d | w2v_cc_300d | Embeddings | WordEmbeddingsModel | nan | nan | lmo | Individual | Living |

| 36 | Low German, Low Saxon | nds.embed.w2v_cc_300d | w2v_cc_300d | Embeddings | WordEmbeddingsModel | nan | nds | nds | Individual | Living |

| 37 | Macedonian | mk.stopwords | stopwords_iso | Stop Words Removal | StopWordsCleaner | mk | 639-2/T: mkd639-2/B: mac | mkd | Individual | Living |

| 38 | Macedonian | mk.lemma | lemma_spacylookup | Lemmatization | LemmatizerModel | mk | 639-2/T: mkd639-2/B: mac | mkd | Individual | Living |

| 39 | Macedonian | mk.embed.w2v_cc_300d | w2v_cc_300d | Embeddings | WordEmbeddingsModel | mk | 639-2/T: mkd639-2/B: mac | mkd | Individual | Living |

| 40 | Maithili | mai.embed.w2v_cc_300d | w2v_cc_300d | Embeddings | WordEmbeddingsModel | nan | mai | mai | Individual | Living |

| 41 | Manx | gv.embed.w2v_cc_300d | w2v_cc_300d | Embeddings | WordEmbeddingsModel | gv | glv | glv | Individual | Living |

| 42 | Mazanderani | mzn.embed.w2v_cc_300d | w2v_cc_300d | Embeddings | WordEmbeddingsModel | nan | nan | mzn | Individual | Living |

| 43 | Minangkabau | min.embed.w2v_cc_300d | w2v_cc_300d | Embeddings | WordEmbeddingsModel | nan | min | min | Individual | Living |

| 44 | Mingrelian | xmf.embed.w2v_cc_300d | w2v_cc_300d | Embeddings | WordEmbeddingsModel | nan | nan | xmf | Individual | Living |

| 45 | Mirandese | mwl.embed.w2v_cc_300d | w2v_cc_300d | Embeddings | WordEmbeddingsModel | nan | mwl | mwl | Individual | Living |

| 46 | Neapolitan | nap.embed.w2v_cc_300d | w2v_cc_300d | Embeddings | WordEmbeddingsModel | nan | nap | nap | Individual | Living |

| 47 | Nepal Bhasa, Newari | new.embed.w2v_cc_300d | w2v_cc_300d | Embeddings | WordEmbeddingsModel | nan | new | new | Individual | Living |

| 48 | Northern Frisian | frr.embed.w2v_cc_300d | w2v_cc_300d | Embeddings | WordEmbeddingsModel | nan | frr | frr | Individual | Living |

| 49 | Northern Sami | sme.lemma | lemma_giella | Lemmatization | LemmatizerModel | se | sme | sme | Individual | Living |

| 50 | Northern Sami | sme.pos | pos_giella | Part of Speech Tagging | PerceptronModel | se | sme | sme | Individual | Living |

| 51 | Northern Sotho, Pedi, Sepedi | nso.embed.w2v_cc_300d | w2v_cc_300d | Embeddings | WordEmbeddingsModel | nan | nso | nso | Individual | Living |

| 52 | Occitan (post 1500) | oc.embed.w2v_cc_300d | w2v_cc_300d | Embeddings | WordEmbeddingsModel | oc | oci | oci | Individual | Living |

| 53 | Ossetian, Ossetic | os.embed.w2v_cc_300d | w2v_cc_300d | Embeddings | WordEmbeddingsModel | os | oss | oss | Individual | Living |

| 54 | Pfaelzisch | pfl.embed.w2v_cc_300d | w2v_cc_300d | Embeddings | WordEmbeddingsModel | nan | nan | pfl | Individual | Living |

| 55 | Piemontese | pms.embed.w2v_cc_300d | w2v_cc_300d | Embeddings | WordEmbeddingsModel | nan | nan | pms | Individual | Living |

| 56 | Romansh | rm.embed.w2v_cc_300d | w2v_cc_300d | Embeddings | WordEmbeddingsModel | rm | roh | roh | Individual | Living |

| 57 | Scots | sco.embed.w2v_cc_300d | w2v_cc_300d | Embeddings | WordEmbeddingsModel | nan | sco | sco | Individual | Living |

| 58 | Sicilian | scn.embed.w2v_cc_300d | w2v_cc_300d | Embeddings | WordEmbeddingsModel | nan | scn | scn | Individual | Living |

| 59 | Sinhala, Sinhalese | si.stopwords | stopwords_iso | Stop Words Removal | StopWordsCleaner | si | sin | sin | Individual | Living |

| 60 | Sinhala, Sinhalese | si.embed.w2v_cc_300d | w2v_cc_300d | Embeddings | WordEmbeddingsModel | si | sin | sin | Individual | Living |

| 61 | Sundanese | su.embed.w2v_cc_300d | w2v_cc_300d | Embeddings | WordEmbeddingsModel | su | sun | sun | Individual | Living |

| 62 | Sundanese | su.embed.sundanese_roberta_base | roberta_embeddings_sundanese_roberta_base | Embeddings | RoBertaEmbeddings | su | sun | sun | Individual | Living |

| 63 | Tagalog | tl.lemma | lemma_spacylookup | Lemmatization | LemmatizerModel | tl | tgl | tgl | Individual | Living |

| 64 | Tagalog | tl.embed.w2v_cc_300d | w2v_cc_300d | Embeddings | WordEmbeddingsModel | tl | tgl | tgl | Individual | Living |

| 65 | Tagalog | tl.stopwords | stopwords_iso | Stop Words Removal | StopWordsCleaner | tl | tgl | tgl | Individual | Living |

| 66 | Tagalog | tl.embed.roberta_tagalog_large | roberta_embeddings_roberta_tagalog_large | Embeddings | RoBertaEmbeddings | tl | tgl | tgl | Individual | Living |

| 67 | Tagalog | tl.embed.roberta_tagalog_base | roberta_embeddings_roberta_tagalog_base | Embeddings | RoBertaEmbeddings | tl | tgl | tgl | Individual | Living |

| 68 | Tajik | tg.embed.w2v_cc_300d | w2v_cc_300d | Embeddings | WordEmbeddingsModel | tg | tgk | tgk | Individual | Living |

| 69 | Tatar | tt.stopwords | stopwords_iso | Stop Words Removal | StopWordsCleaner | tt | tat | tat | Individual | Living |

| 70 | Tatar | tt.embed.w2v_cc_300d | w2v_cc_300d | Embeddings | WordEmbeddingsModel | tt | tat | tat | Individual | Living |

| 71 | Tigrinya | ti.stopwords | stopwords_iso | Stop Words Removal | StopWordsCleaner | ti | tir | tir | Individual | Living |

| 72 | Tosk Albanian | als.embed.w2v_cc_300d | w2v_cc_300d | Embeddings | WordEmbeddingsModel | nan | nan | als | Individual | Living |

| 73 | Tswana | tn.stopwords | stopwords_iso | Stop Words Removal | StopWordsCleaner | tn | tsn | tsn | Individual | Living |

| 74 | Turkmen | tk.embed.w2v_cc_300d | w2v_cc_300d | Embeddings | WordEmbeddingsModel | tk | tuk | tuk | Individual | Living |

| 75 | Upper Sorbian | hsb.embed.w2v_cc_300d | w2v_cc_300d | Embeddings | WordEmbeddingsModel | nan | hsb | hsb | Individual | Living |

| 76 | Venetian | vec.embed.w2v_cc_300d | w2v_cc_300d | Embeddings | WordEmbeddingsModel | nan | nan | vec | Individual | Living |

| 77 | Vlaams | vls.embed.w2v_cc_300d | w2v_cc_300d | Embeddings | WordEmbeddingsModel | nan | nan | vls | Individual | Living |

| 78 | Walloon | wa.embed.w2v_cc_300d | w2v_cc_300d | Embeddings | WordEmbeddingsModel | wa | wln | wln | Individual | Living |

| 79 | Waray (Philippines) | war.embed.w2v_cc_300d | w2v_cc_300d | Embeddings | WordEmbeddingsModel | nan | war | war | Individual | Living |

| 80 | Western Armenian | hyw.pos | pos_armtdp | Part of Speech Tagging | PerceptronModel | nan | nan | hyw | Individual | Living |

| 81 | Western Armenian | hyw.lemma | lemma_armtdp | Lemmatization | LemmatizerModel | nan | nan | hyw | Individual | Living |

| 82 | Western Frisian | fy.embed.w2v_cc_300d | w2v_cc_300d | Embeddings | WordEmbeddingsModel | fy | fry | fry | Individual | Living |

| 83 | Western Panjabi | pnb.embed.w2v_cc_300d | w2v_cc_300d | Embeddings | WordEmbeddingsModel | nan | nan | pnb | Individual | Living |

| 84 | Yakut | sah.embed.w2v_cc_300d | w2v_cc_300d | Embeddings | WordEmbeddingsModel | nan | sah | sah | Individual | Living |

| 85 | Zeeuws | zea.embed.w2v_cc_300d | w2v_cc_300d | Embeddings | WordEmbeddingsModel | nan | nan | zea | Individual | Living |

| 86 | Albanian | sq.stopwords | stopwords_iso | Stop Words Removal | StopWordsCleaner | sq | 639-2/T: sqi639-2/B: alb | sqi | Macrolanguage | Living |

| 87 | Albanian | sq.embed.w2v_cc_300d | w2v_cc_300d | Embeddings | WordEmbeddingsModel | sq | 639-2/T: sqi639-2/B: alb | sqi | Macrolanguage | Living |

| 88 | Azerbaijani | az.embed.w2v_cc_300d | w2v_cc_300d | Embeddings | WordEmbeddingsModel | az | aze | aze | Macrolanguage | Living |

| 89 | Azerbaijani | az.stopwords | stopwords_iso | Stop Words Removal | StopWordsCleaner | az | aze | aze | Macrolanguage | Living |

| 90 | Malagasy | mg.embed.w2v_cc_300d | w2v_cc_300d | Embeddings | WordEmbeddingsModel | mg | mlg | mlg | Macrolanguage | Living |

| 91 | Malay (macrolanguage) | ms.embed.albert | albert_embeddings_albert_large_bahasa_cased | Embeddings | AlbertEmbeddings | ms | 639-2/T: msa639-2/B: may | msa | Macrolanguage | Living |

| 92 | Malay (macrolanguage) | ms.embed.distilbert | distilbert_embeddings_malaysian_distilbert_small | Embeddings | DistilBertEmbeddings | ms | 639-2/T: msa639-2/B: may | msa | Macrolanguage | Living |

| 93 | Malay (macrolanguage) | ms.embed.albert_tiny_bahasa_cased | albert_embeddings_albert_tiny_bahasa_cased | Embeddings | AlbertEmbeddings | ms | 639-2/T: msa639-2/B: may | msa | Macrolanguage | Living |

| 94 | Malay (macrolanguage) | ms.embed.albert_base_bahasa_cased | albert_embeddings_albert_base_bahasa_cased | Embeddings | AlbertEmbeddings | ms | 639-2/T: msa639-2/B: may | msa | Macrolanguage | Living |

| 95 | Malay (macrolanguage) | ms.embed.w2v_cc_300d | w2v_cc_300d | Embeddings | WordEmbeddingsModel | ms | 639-2/T: msa639-2/B: may | msa | Macrolanguage | Living |

| 96 | Mongolian | mn.embed.w2v_cc_300d | w2v_cc_300d | Embeddings | WordEmbeddingsModel | mn | mon | mon | Macrolanguage | Living |

| 97 | Oriya (macrolanguage) | or.embed.w2v_cc_300d | w2v_cc_300d | Embeddings | WordEmbeddingsModel | or | ori | ori | Macrolanguage | Living |

| 98 | Pashto, Pushto | ps.embed.w2v_cc_300d | w2v_cc_300d | Embeddings | WordEmbeddingsModel | ps | pus | pus | Macrolanguage | Living |

| 99 | Quechua | qu.embed.w2v_cc_300d | w2v_cc_300d | Embeddings | WordEmbeddingsModel | qu | que | que | Macrolanguage | Living |

| 100 | Sardinian | sc.embed.w2v_cc_300d | w2v_cc_300d | Embeddings | WordEmbeddingsModel | sc | srd | srd | Macrolanguage | Living |

| 101 | Serbo-Croatian | sh.embed.w2v_cc_300d | w2v_cc_300d | Embeddings | WordEmbeddingsModel | sh | nan | nan | Macrolanguage | Living |

| 102 | Uzbek | uz.embed.w2v_cc_300d | w2v_cc_300d | Embeddings | WordEmbeddingsModel | uz | uzb | uzb | Macrolanguage | Living |

All general NLP models

Powered by the incredible Spark NLP 3.4.4 and previous releases.

All Healthcare

Powered by the amazing Spark NLP for Healthcare 3.5.2 and Spark NLP for Healthcare 3.5.1 releases.

| Number | NLU Reference | Spark NLP Reference | Task | Language Name(s) | Annotator Class | ISO-639-1 | ISO-639-2/639-5 | ISO-639-3 | Language Type | Scope |

|---|---|---|---|---|---|---|---|---|---|---|

| 0 | en.med_ner.biomedical_bc2gm | ner_biomedical_bc2gm | Named Entity Recognition | English | MedicalNerModel | en | eng | eng | Living | Individual |

| 1 | en.med_ner.biomedical_bc2gm | ner_biomedical_bc2gm | Named Entity Recognition | English | MedicalNerModel | en | eng | eng | Living | Individual |

| 2 | en.resolve.rxnorm_action_treatment | sbiobertresolve_rxnorm_action_treatment | Entity Resolution | English | SentenceEntityResolverModel | en | eng | eng | Living | Individual |

| 3 | en.classify.token_bert.ner_ade | bert_token_classifier_ner_ade | Named Entity Recognition | English | MedicalBertForTokenClassifier | en | eng | eng | Living | Individual |

| 4 | en.classify.token_bert.ner_ade | bert_token_classifier_ner_ade | Named Entity Recognition | English | MedicalBertForTokenClassifier | en | eng | eng | Living | Individual |

| 5 | pt.med_ner.deid.subentity | ner_deid_subentity | De-identification | Portuguese | MedicalNerModel | pt | por | por | Living | Individual |

| 6 | pt.med_ner.deid.generic | ner_deid_generic | De-identification | Portuguese | MedicalNerModel | pt | por | por | Living | Individual |

| 7 | pt.med_ner.deid | ner_deid_generic | De-identification | Portuguese | MedicalNerModel | pt | por | por | Living | Individual |

NLU Version 3.4.3

Zero-Shot-Relation-Extraction, DeBERTa for Sequence Classification, 150+ new models, 60+ Languages in John Snow Labs NLU 3.4.3

We are very excited to announce NLU 3.4.3 has been released!

This release features new models for Zero-Shot-Relation-Extraction, DeBERTa for Sequence Classification,

Deidentification in French and Italian and

Lemmatizers, Parts of Speech Taggers, and Word2Vec Embeddings for over 66 languages, with 20 languages being covered

for the first time by NLU, including ancient and exotic languages like Ancient Greek, Old Russian,

Old French and much more. Once again we would like to thank our community to make this release possible.

NLU for Healthcare

On the healthcare NLP side, a new ZeroShotRelationExtractionModel is available, which can extract relations between

clinical entities in an unsupervised fashion, no training required!

Additionally, New French and Italian Deidentification models are available for clinical and healthcare domains.

Powerd by the fantastic Spark NLP for helathcare 3.5.0 release

Zero-Shot Relation Extraction

Zero-shot Relation Extraction to extract relations between clinical entities with no training dataset

import nlu

pipe = nlu.load('med_ner.clinical relation.zeroshot_biobert')

# Configure relations to extract

pipe['zero_shot_relation_extraction'].setRelationalCategories({

"CURE": [" cures ."],

"IMPROVE": [" improves .", " cures ."],

"REVEAL": [" reveals ."]})

.setMultiLabel(False)

df = pipe.predict("Paracetamol can alleviate headache or sickness. An MRI test can be used to find cancer.")

df[

'relation', 'relation_confidence', 'relation_entity1', 'relation_entity1_class', 'relation_entity2', 'relation_entity2_class',]

# Results in following table :

| relation | relation_confidence | relation_entity1 | relation_entity1_class | relation_entity2 | relation_entity2_class |

|---|---|---|---|---|---|

| REVEAL | 0.976004 | An MRI test | TEST | cancer | PROBLEM |

| IMPROVE | 0.988195 | Paracetamol | TREATMENT | sickness | PROBLEM |

| IMPROVE | 0.992962 | Paracetamol | TREATMENT | headache | PROBLEM |

New Healthcare Models overview

| Language | NLU Reference | Spark NLP Reference | Task | Annotator Class |

|---|---|---|---|---|

| en | en.relation.zeroshot_biobert | re_zeroshot_biobert | Relation Extraction | ZeroShotRelationExtractionModel |

| fr | fr.med_ner.deid_generic | ner_deid_generic | De-identification | MedicalNerModel |

| fr | fr.med_ner.deid_subentity | ner_deid_subentity | De-identification | MedicalNerModel |

| it | it.med_ner.deid_generic | ner_deid_generic | Named Entity Recognition | MedicalNerModel |

| it | it.med_ner.deid_subentity | ner_deid_subentity | Named Entity Recognition | MedicalNerModel |

NLU general

On the general NLP side we have new transformer based DeBERTa v3 sequence classifiers models fine-tuned in Urdu, French and English for

Sentiment and News classification. Additionally, 100+ Part Of Speech Taggers and Lemmatizers for 66 Languages and for 7

languages new word2vec embeddings, including hi,azb,bo,diq,cy,es,it,

powered by the amazing Spark NLP 3.4.3 release

New Languages covered:

First time languages covered by NLU are :

South Azerbaijani, Tibetan, Dimli, Central Kurdish, Southern Altai,

Scottish Gaelic,Faroese,Literary Chinese,Ancient Greek,

Gothic, Old Russian, Church Slavic,

Old French,Uighur,Coptic,Croatian, Belarusian, Serbian

and their respective ISO-639-3 and ISO 630-2 codes are :

azb,bo,diq,ckb, lt gd, fo,lzh,grc,got,orv,cu,fro,qtd,ug,cop,hr,be,qhe,sr

New NLP Models Overview

| Language | NLU Reference | Spark NLP Reference | Task | Annotator Class |

|---|---|---|---|---|

| en | en.classify.sentiment.imdb.deberta | deberta_v3_xsmall_sequence_classifier_imdb | Text Classification | DeBertaForSequenceClassification |

| en | en.classify.sentiment.imdb.deberta.small | deberta_v3_small_sequence_classifier_imdb | Text Classification | DeBertaForSequenceClassification |

| en | en.classify.sentiment.imdb.deberta.base | deberta_v3_base_sequence_classifier_imdb | Text Classification | DeBertaForSequenceClassification |

| en | en.classify.sentiment.imdb.deberta.large | deberta_v3_large_sequence_classifier_imdb | Text Classification | DeBertaForSequenceClassification |

| en | en.classify.news.deberta | deberta_v3_xsmall_sequence_classifier_ag_news | Text Classification | DeBertaForSequenceClassification |

| en | en.classify.news.deberta.small | deberta_v3_small_sequence_classifier_ag_news | Text Classification | DeBertaForSequenceClassification |

| ur | ur.classify.sentiment.imdb | mdeberta_v3_base_sequence_classifier_imdb | Text Classification | DeBertaForSequenceClassification |

| fr | fr.classify.allocine | mdeberta_v3_base_sequence_classifier_allocine | Text Classification | DeBertaForSequenceClassification |

| ur | ur.embed.bert_cased | bert_embeddings_bert_base_ur_cased | Embeddings | BertEmbeddings |

| fr | fr.embed.bert_5lang_cased | bert_embeddings_bert_base_5lang_cased | Embeddings | BertEmbeddings |

| de | de.embed.medbert | bert_embeddings_German_MedBERT | Embeddings | BertEmbeddings |

| ar | ar.embed.arbert | bert_embeddings_ARBERT | Embeddings | BertEmbeddings |

| bn | bn.embed.bangala_bert | bert_embeddings_bangla_bert_base | Embeddings | BertEmbeddings |

| zh | zh.embed.bert_5lang_cased | bert_embeddings_bert_base_5lang_cased | Embeddings | BertEmbeddings |

| hi | hi.embed.bert_hi_cased | bert_embeddings_bert_base_hi_cased | Embeddings | BertEmbeddings |

| it | it.embed.bert_it_cased | bert_embeddings_bert_base_it_cased | Embeddings | BertEmbeddings |

| ko | ko.embed.bert | bert_embeddings_bert_base | Embeddings | BertEmbeddings |

| tr | tr.embed.bert_cased | bert_embeddings_bert_base_tr_cased | Embeddings | BertEmbeddings |

| vi | vi.embed.bert_cased | bert_embeddings_bert_base_vi_cased | Embeddings | BertEmbeddings |

| hif | hif.embed.w2v_cc_300d | w2v_cc_300d | Embeddings | WordEmbeddingsModel |

| azb | azb.embed.w2v_cc_300d | w2v_cc_300d | Embeddings | WordEmbeddingsModel |

| bo | bo.embed.w2v_cc_300d | w2v_cc_300d | Embeddings | WordEmbeddingsModel |

| diq | diq.embed.w2v_cc_300d | w2v_cc_300d | Embeddings | WordEmbeddingsModel |

| cy | cy.embed.w2v_cc_300d | w2v_cc_300d | Embeddings | WordEmbeddingsModel |

| es | es.embed.w2v_cc_300d | w2v_cc_300d | Embeddings | WordEmbeddingsModel |

| it | it.embed.word2vec | w2v_cc_300d | Embeddings | WordEmbeddingsModel |

| af | af.lemma | lemma | Lemmatization | LemmatizerModel |

| lt | lt.lemma | lemma_alksnis | Lemmatization | LemmatizerModel |

| nl | nl.lemma | lemma | Lemmatization | LemmatizerModel |

| gd | gd.lemma | lemma_arcosg | Lemmatization | LemmatizerModel |

| es | es.lemma | lemma | Lemmatization | LemmatizerModel |

| ca | ca.lemma | lemma | Lemmatization | LemmatizerModel |

| el | el.lemma.gdt | lemma_gdt | Lemmatization | LemmatizerModel |

| en | en.lemma.atis | lemma_atis | Lemmatization | LemmatizerModel |

| tr | tr.lemma.boun | lemma_boun | Lemmatization | LemmatizerModel |

| da | da.lemma.ddt | lemma_ddt | Lemmatization | LemmatizerModel |

| cs | cs.lemma.cac | lemma_cac | Lemmatization | LemmatizerModel |

| en | en.lemma.esl | lemma_esl | Lemmatization | LemmatizerModel |

| bg | bg.lemma.btb | lemma_btb | Lemmatization | LemmatizerModel |

| id | id.lemma.csui | lemma_csui | Lemmatization | LemmatizerModel |

| gl | gl.lemma.ctg | lemma_ctg | Lemmatization | LemmatizerModel |

| cy | cy.lemma.ccg | lemma_ccg | Lemmatization | LemmatizerModel |

| fo | fo.lemma.farpahc | lemma_farpahc | Lemmatization | LemmatizerModel |

| tr | tr.lemma.atis | lemma_atis | Lemmatization | LemmatizerModel |

| ga | ga.lemma.idt | lemma_idt | Lemmatization | LemmatizerModel |

| ja | ja.lemma.gsdluw | lemma_gsdluw | Lemmatization | LemmatizerModel |

| es | es.lemma.gsd | lemma_gsd | Lemmatization | LemmatizerModel |

| en | en.lemma.gum | lemma_gum | Lemmatization | LemmatizerModel |

| zh | zh.lemma.gsd | lemma_gsd | Lemmatization | LemmatizerModel |

| lv | lv.lemma.lvtb | lemma_lvtb | Lemmatization | LemmatizerModel |

| hi | hi.lemma.hdtb | lemma_hdtb | Lemmatization | LemmatizerModel |

| pt | pt.lemma.gsd | lemma_gsd | Lemmatization | LemmatizerModel |

| de | de.lemma.gsd | lemma_gsd | Lemmatization | LemmatizerModel |

| nl | nl.lemma.lassysmall | lemma_lassysmall | Lemmatization | LemmatizerModel |

| lzh | lzh.lemma.kyoto | lemma_kyoto | Lemmatization | LemmatizerModel |

| zh | zh.lemma.gsdsimp | lemma_gsdsimp | Lemmatization | LemmatizerModel |

| he | he.lemma.htb | lemma_htb | Lemmatization | LemmatizerModel |

| fr | fr.lemma.gsd | lemma_gsd | Lemmatization | LemmatizerModel |

| ro | ro.lemma.nonstandard | lemma_nonstandard | Lemmatization | LemmatizerModel |

| ja | ja.lemma.gsd | lemma_gsd | Lemmatization | LemmatizerModel |

| it | it.lemma.isdt | lemma_isdt | Lemmatization | LemmatizerModel |

| de | de.lemma.hdt | lemma_hdt | Lemmatization | LemmatizerModel |

| is | is.lemma.modern | lemma_modern | Lemmatization | LemmatizerModel |

| la | la.lemma.ittb | lemma_ittb | Lemmatization | LemmatizerModel |

| fr | fr.lemma.partut | lemma_partut | Lemmatization | LemmatizerModel |

| pcm | pcm.lemma.nsc | lemma_nsc | Lemmatization | LemmatizerModel |

| pl | pl.lemma.pdb | lemma_pdb | Lemmatization | LemmatizerModel |

| grc | grc.lemma.perseus | lemma_perseus | Lemmatization | LemmatizerModel |

| cs | cs.lemma.pdt | lemma_pdt | Lemmatization | LemmatizerModel |

| fa | fa.lemma.perdt | lemma_perdt | Lemmatization | LemmatizerModel |

| got | got.lemma.proiel | lemma_proiel | Lemmatization | LemmatizerModel |

| fr | fr.lemma.rhapsodie | lemma_rhapsodie | Lemmatization | LemmatizerModel |

| it | it.lemma.partut | lemma_partut | Lemmatization | LemmatizerModel |

| en | en.lemma.partut | lemma_partut | Lemmatization | LemmatizerModel |

| no | no.lemma.nynorsklia | lemma_nynorsklia | Lemmatization | LemmatizerModel |

| orv | orv.lemma.rnc | lemma_rnc | Lemmatization | LemmatizerModel |

| cu | cu.lemma.proiel | lemma_proiel | Lemmatization | LemmatizerModel |

| la | la.lemma.perseus | lemma_perseus | Lemmatization | LemmatizerModel |

| fr | fr.lemma.parisstories | lemma_parisstories | Lemmatization | LemmatizerModel |

| fro | fro.lemma.srcmf | lemma_srcmf | Lemmatization | LemmatizerModel |

| vi | vi.lemma.vtb | lemma_vtb | Lemmatization | LemmatizerModel |

| qtd | qtd.lemma.sagt | lemma_sagt | Lemmatization | LemmatizerModel |

| ro | ro.lemma.rrt | lemma_rrt | Lemmatization | LemmatizerModel |

| hu | hu.lemma.szeged | lemma_szeged | Lemmatization | LemmatizerModel |

| ug | ug.lemma.udt | lemma_udt | Lemmatization | LemmatizerModel |

| wo | wo.lemma.wtb | lemma_wtb | Lemmatization | LemmatizerModel |

| cop | cop.lemma.scriptorium | lemma_scriptorium | Lemmatization | LemmatizerModel |

| ru | ru.lemma.syntagrus | lemma_syntagrus | Lemmatization | LemmatizerModel |

| ru | ru.lemma.taiga | lemma_taiga | Lemmatization | LemmatizerModel |

| fr | fr.lemma.sequoia | lemma_sequoia | Lemmatization | LemmatizerModel |

| la | la.lemma.udante | lemma_udante | Lemmatization | LemmatizerModel |

| ro | ro.lemma.simonero | lemma_simonero | Lemmatization | LemmatizerModel |

| it | it.lemma.vit | lemma_vit | Lemmatization | LemmatizerModel |

| hr | hr.lemma.set | lemma_set | Lemmatization | LemmatizerModel |

| fa | fa.lemma.seraji | lemma_seraji | Lemmatization | LemmatizerModel |

| tr | tr.lemma.tourism | lemma_tourism | Lemmatization | LemmatizerModel |

| ta | ta.lemma.ttb | lemma_ttb | Lemmatization | LemmatizerModel |

| sl | sl.lemma.ssj | lemma_ssj | Lemmatization | LemmatizerModel |

| sv | sv.lemma.talbanken | lemma_talbanken | Lemmatization | LemmatizerModel |

| uk | uk.lemma.iu | lemma_iu | Lemmatization | LemmatizerModel |

| te | te.pos | pos_mtg | Part of Speech Tagging | PerceptronModel |

| te | te.pos | pos_mtg | Part of Speech Tagging | PerceptronModel |

| ta | ta.pos | pos_ttb | Part of Speech Tagging | PerceptronModel |

| ta | ta.pos | pos_ttb | Part of Speech Tagging | PerceptronModel |

| cs | cs.pos | pos_ud_pdt | Part of Speech Tagging | PerceptronModel |

| cs | cs.pos | pos_ud_pdt | Part of Speech Tagging | PerceptronModel |

| bg | bg.pos | pos_btb | Part of Speech Tagging | PerceptronModel |

| bg | bg.pos | pos_btb | Part of Speech Tagging | PerceptronModel |

| af | af.pos | pos_afribooms | Part of Speech Tagging | PerceptronModel |

| af | af.pos | pos_afribooms | Part of Speech Tagging | PerceptronModel |

| af | af.pos | pos_afribooms | Part of Speech Tagging | PerceptronModel |

| es | es.pos.gsd | pos_gsd | Part of Speech Tagging | PerceptronModel |

| en | en.pos.ewt | pos_ewt | Part of Speech Tagging | PerceptronModel |

| gd | gd.pos.arcosg | pos_arcosg | Part of Speech Tagging | PerceptronModel |

| el | el.pos.gdt | pos_gdt | Part of Speech Tagging | PerceptronModel |

| hy | hy.pos.armtdp | pos_armtdp | Part of Speech Tagging | PerceptronModel |

| pt | pt.pos.bosque | pos_bosque | Part of Speech Tagging | PerceptronModel |

| tr | tr.pos.framenet | pos_framenet | Part of Speech Tagging | PerceptronModel |

| cs | cs.pos.cltt | pos_cltt | Part of Speech Tagging | PerceptronModel |

| eu | eu.pos.bdt | pos_bdt | Part of Speech Tagging | PerceptronModel |

| et | et.pos.ewt | pos_ewt | Part of Speech Tagging | PerceptronModel |

| da | da.pos.ddt | pos_ddt | Part of Speech Tagging | PerceptronModel |

| cy | cy.pos.ccg | pos_ccg | Part of Speech Tagging | PerceptronModel |

| lt | lt.pos.alksnis | pos_alksnis | Part of Speech Tagging | PerceptronModel |

| nl | nl.pos.alpino | pos_alpino | Part of Speech Tagging | PerceptronModel |

| fi | fi.pos.ftb | pos_ftb | Part of Speech Tagging | PerceptronModel |

| tr | tr.pos.atis | pos_atis | Part of Speech Tagging | PerceptronModel |

| ca | ca.pos.ancora | pos_ancora | Part of Speech Tagging | PerceptronModel |

| gl | gl.pos.ctg | pos_ctg | Part of Speech Tagging | PerceptronModel |

| de | de.pos.gsd | pos_gsd | Part of Speech Tagging | PerceptronModel |

| fr | fr.pos.gsd | pos_gsd | Part of Speech Tagging | PerceptronModel |

| ja | ja.pos.gsdluw | pos_gsdluw | Part of Speech Tagging | PerceptronModel |

| it | it.pos.isdt | pos_isdt | Part of Speech Tagging | PerceptronModel |

| be | be.pos.hse | pos_hse | Part of Speech Tagging | PerceptronModel |

| nl | nl.pos.lassysmall | pos_lassysmall | Part of Speech Tagging | PerceptronModel |

| sv | sv.pos.lines | pos_lines | Part of Speech Tagging | PerceptronModel |

| uk | uk.pos.iu | pos_iu | Part of Speech Tagging | PerceptronModel |

| fr | fr.pos.parisstories | pos_parisstories | Part of Speech Tagging | PerceptronModel |

| en | en.pos.partut | pos_partut | Part of Speech Tagging | PerceptronModel |

| la | la.pos.ittb | pos_ittb | Part of Speech Tagging | PerceptronModel |

| lzh | lzh.pos.kyoto | pos_kyoto | Part of Speech Tagging | PerceptronModel |

| id | id.pos.gsd | pos_gsd | Part of Speech Tagging | PerceptronModel |

| he | he.pos.htb | pos_htb | Part of Speech Tagging | PerceptronModel |

| tr | tr.pos.kenet | pos_kenet | Part of Speech Tagging | PerceptronModel |

| de | de.pos.hdt | pos_hdt | Part of Speech Tagging | PerceptronModel |

| qhe | qhe.pos.hiencs | pos_hiencs | Part of Speech Tagging | PerceptronModel |

| la | la.pos.llct | pos_llct | Part of Speech Tagging | PerceptronModel |

| en | en.pos.lines | pos_lines | Part of Speech Tagging | PerceptronModel |

| pcm | pcm.pos.nsc | pos_nsc | Part of Speech Tagging | PerceptronModel |

| ko | ko.pos.kaist | pos_kaist | Part of Speech Tagging | PerceptronModel |

| pt | pt.pos.gsd | pos_gsd | Part of Speech Tagging | PerceptronModel |

| hi | hi.pos.hdtb | pos_hdtb | Part of Speech Tagging | PerceptronModel |

| is | is.pos.modern | pos_modern | Part of Speech Tagging | PerceptronModel |

| en | en.pos.gum | pos_gum | Part of Speech Tagging | PerceptronModel |

| fro | fro.pos.srcmf | pos_srcmf | Part of Speech Tagging | PerceptronModel |

| sl | sl.pos.ssj | pos_ssj | Part of Speech Tagging | PerceptronModel |

| ru | ru.pos.taiga | pos_taiga | Part of Speech Tagging | PerceptronModel |

| grc | grc.pos.perseus | pos_perseus | Part of Speech Tagging | PerceptronModel |

| sr | sr.pos.set | pos_set | Part of Speech Tagging | PerceptronModel |

| orv | orv.pos.rnc | pos_rnc | Part of Speech Tagging | PerceptronModel |

| ug | ug.pos.udt | pos_udt | Part of Speech Tagging | PerceptronModel |

| got | got.pos.proiel | pos_proiel | Part of Speech Tagging | PerceptronModel |

| sv | sv.pos.talbanken | pos_talbanken | Part of Speech Tagging | PerceptronModel |

| sv | sv.pos.talbanken | pos_talbanken | Part of Speech Tagging | PerceptronModel |

| pl | pl.pos.pdb | pos_pdb | Part of Speech Tagging | PerceptronModel |

| fa | fa.pos.seraji | pos_seraji | Part of Speech Tagging | PerceptronModel |

| tr | tr.pos.penn | pos_penn | Part of Speech Tagging | PerceptronModel |

| hu | hu.pos.szeged | pos_szeged | Part of Speech Tagging | PerceptronModel |

| sk | sk.pos.snk | pos_snk | Part of Speech Tagging | PerceptronModel |

| sk | sk.pos.snk | pos_snk | Part of Speech Tagging | PerceptronModel |

| ro | ro.pos.simonero | pos_simonero | Part of Speech Tagging | PerceptronModel |

| it | it.pos.postwita | pos_postwita | Part of Speech Tagging | PerceptronModel |

| gl | gl.pos.treegal | pos_treegal | Part of Speech Tagging | PerceptronModel |

| cs | cs.pos.pdt | pos_pdt | Part of Speech Tagging | PerceptronModel |

| ro | ro.pos.rrt | pos_rrt | Part of Speech Tagging | PerceptronModel |

| orv | orv.pos.torot | pos_torot | Part of Speech Tagging | PerceptronModel |

| hr | hr.pos.set | pos_set | Part of Speech Tagging | PerceptronModel |

| la | la.pos.proiel | pos_proiel | Part of Speech Tagging | PerceptronModel |

| fr | fr.pos.partut | pos_partut | Part of Speech Tagging | PerceptronModel |

| it | it.pos.vit | pos_vit | Part of Speech Tagging | PerceptronModel |

Bugfixes

- Improved Error Messages and integrated detection and stopping of endless loops which could occur during construction of nlu pipelines

Additional NLU resources

- 140+ NLU Tutorials

- NLU in Action

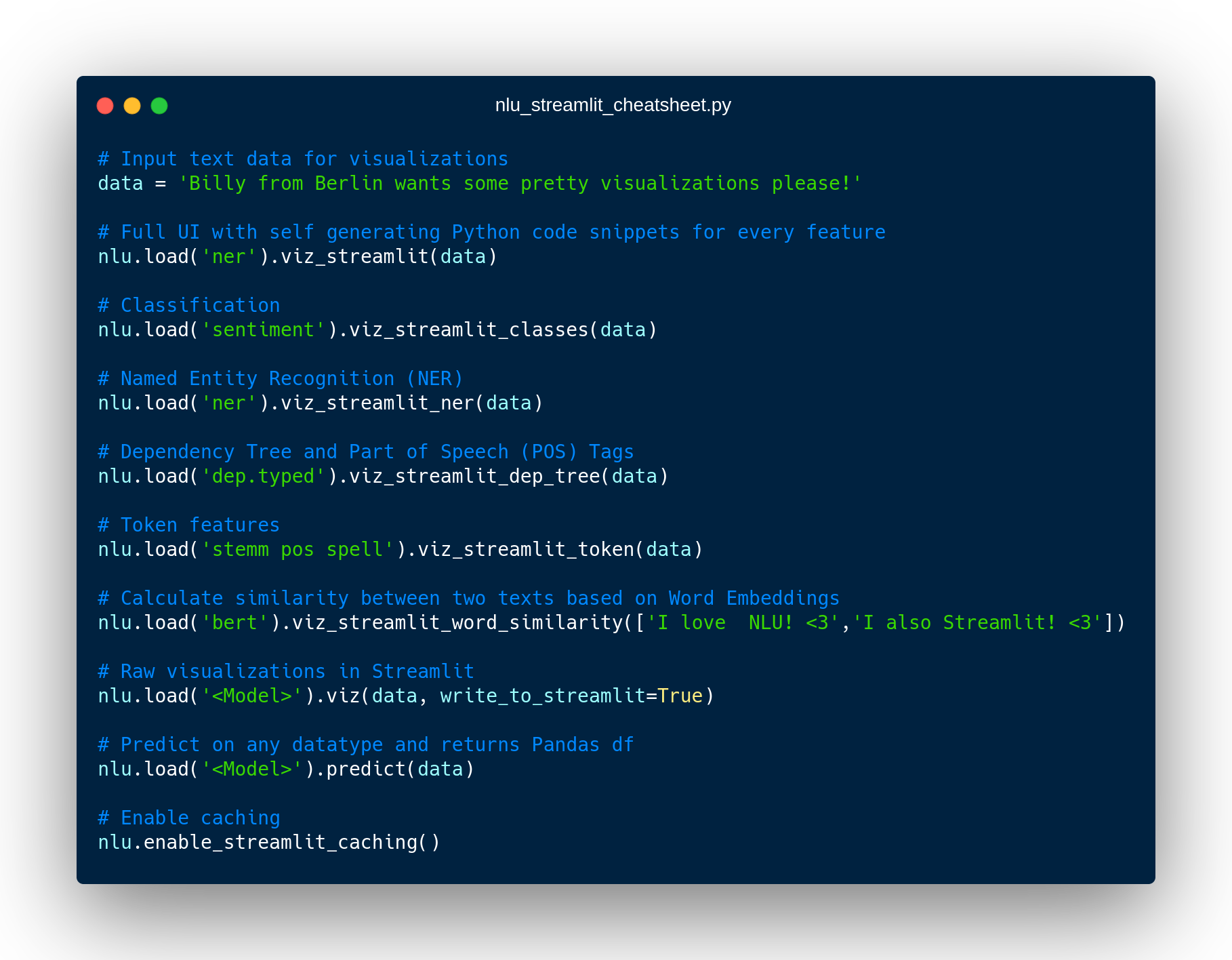

- Streamlit visualizations docs

- The complete list of all 4000+ models & pipelines in 200+ languages is available on Models Hub.

- Spark NLP publications

- NLU documentation

- Discussions Engage with other community members, share ideas, and show off how you use Spark NLP and NLU!

Install NLU in 1 line!

* Install NLU on Google Colab : !wget https://setup.johnsnowlabs.com/nlu/colab.sh -O - | bash

* Install NLU on Kaggle : !wget https://setup.johnsnowlabs.com/nlu/kaggle.sh -O - | bash

* Install NLU via Pip : ! pip install nlu pyspark streamlit==0.80.0`

NLU Version 3.4.2

Multilingual DeBERTa Transformer Embeddings for 100+ Languages, Spanish Deidentification and NER for Randomized Clinical Trials - John Snow Labs NLU 3.4.2

We are very excited NLU 3.4.2 has been released. On the open source side we have 5 new DeBERTa Transformer models for English and Multi-Lingual for 100+ languages. DeBERTa improves over BERT and RoBERTa by introducing two novel techniques.

For the healthcare side we have new NER models for randomized clinical trials (RCT) which can detect entities of type

BACKGROUND, CONCLUSIONS, METHODS, OBJECTIVE, RESULTS from clinical text.

Additionally, new Spanish Deidentification NER models for entities like STATE, PATIENT, DEVICE, COUNTRY, ZIP, PHONE, HOSPITAL and many more.

New Open Source Models

Integrates models from Spark NLP 3.4.2 release

| Language | NLU Reference | Spark NLP Reference | Task | Annotator Class |

|---|---|---|---|---|

| en | en.embed.deberta_v3_xsmall | deberta_v3_xsmall | Embeddings | DeBertaEmbeddings |

| en | en.embed.deberta_v3_small | deberta_v3_small | Embeddings | DeBertaEmbeddings |

| en | en.embed.deberta_v3_base | deberta_v3_base | Embeddings | DeBertaEmbeddings |

| en | en.embed.deberta_v3_large | deberta_v3_large | Embeddings | DeBertaEmbeddings |

| xx | xx.embed.mdeberta_v3_base | mdeberta_v3_base | Embeddings | DeBertaEmbeddings |

New Healthcare Models

Integrates models from Spark NLP For Healthcare 3.4.2 release

| Language | NLU Reference | Spark NLP Reference | Task | Annotator Class |

|---|---|---|---|---|

| en | en.med_ner.clinical_trials | bert_sequence_classifier_rct_biobert | Text Classification | MedicalBertForSequenceClassification |

| es | es.med_ner.deid.generic.roberta | ner_deid_generic_roberta_augmented | De-identification | MedicalNerModel |

| es | es.med_ner.deid.subentity.roberta | ner_deid_subentity_roberta_augmented | De-identification | MedicalNerModel |

| en | en.med_ner.deid.generic_augmented | ner_deid_generic_augmented | [‘Named Entity Recognition’, ‘De-identification’] | MedicalNerModel |

| en | en.med_ner.deid.subentity_augmented | ner_deid_subentity_augmented | [‘Named Entity Recognition’, ‘De-identification’] | MedicalNerModel |

Additional NLU resources

- 140+ NLU Tutorials

- NLU in Action

- Streamlit visualizations docs

- The complete list of all 4000+ models & pipelines in 200+ languages is available on Models Hub.

- Spark NLP publications

- NLU documentation

- Discussions Engage with other community members, share ideas, and show off how you use Spark NLP and NLU!

Install NLU in 1 line!

* Install NLU on Google Colab : !wget https://setup.johnsnowlabs.com/nlu/colab.sh -O - | bash

* Install NLU on Kaggle : !wget https://setup.johnsnowlabs.com/nlu/kaggle.sh -O - | bash

* Install NLU via Pip : ! pip install nlu pyspark streamlit==0.80.0`

NLU Version 3.4.1

22 New models for 23 languages including various African and Indian languages, Medical Spanish models and more in NLU 3.4.1

We are very excited to announce the release of NLU 3.4.1 which features 22 new models for 23 languages where the The open-source side covers new Embeddings for Vietnamese and English Clinical domains and Multilingual Embeddings for 12 Indian and 9 African Languages. Additionally, there are new Sequence classifiers for Multilingual NER for 9 African languages, German Sentiment Classifiers and English Emotion and Typo Classifiers. The healthcare side covers Medical Spanish models, Classifiers for Drugs, Gender, the Pico Framework, and Relation Extractors for Adverse Drug events and Temporality. Finally, Spark 3.2.X is now supported and bugs related to Databricks environments have been fixed.

General NLU Improvements

- Support for Spark 3.2.x

New Open Source Models

Based on the amazing 3.4.1 Spark NLP Release integrates new Multilingual embeddings for 12 Major Indian languages, embeddings for Vietnamese, French, and English Clinical domains. Additionally new Multilingual NER model for 9 African languages, English 6 Class Emotion classifier and Typo detectors.

New Embeddings

- Multilingual ALBERT - IndicBert model pretrained exclusively on 12 major Indian languages with size smaller and performance on par or better than competing models. Languages covered are Assamese, Bengali, English, Gujarati, Hindi, Kannada, Malayalam, Marathi, Oriya, Punjabi, Tamil, Telugu. Available with xx.embed.albert.indic

- Fine tuned Vietnamese DistilBERT Base cased embeddings. Available with vi.embed.distilbert.cased

- Clinical Longformer Embeddings which consistently out-performs ClinicalBERT for various downstream tasks and on datasets. Available with en.embed.longformer.clinical

- Fine tuned Static French Word2Vec Embeddings in 3 sizes, 200d, 300d and 100d. Available with fr.embed.word2vec_wiki_1000, fr.embed.word2vec_wac_200 and fr.embed.w2v_cc_300d

New Transformer based Token and Sequence Classifiers

- Multilingual NER Distilbert model which detects entities

DATE,LOC,ORG,PERfor the languages 9 African languages (Hausa, Igbo, Kinyarwanda, Luganda, Nigerian, Pidgin, Swahili, Wolof, and Yorùbá). Available with xx.ner.masakhaner.distilbert - German News Sentiment Classifier available with de.classify.news_sentiment.bert

- English Emotion Classifier for 6 Classes available with en.classify.emotion.bert

- **English Typo Detector **: available with en.classify.typos.distilbert

| Language | NLU Reference | Spark NLP Reference | Task | Annotator Class |

|---|---|---|---|---|

| xx | xx.embed.albert.indic | albert_indic | Embeddings | AlbertEmbeddings |

| xx | xx.ner.masakhaner.distilbert | xlm_roberta_large_token_classifier_masakhaner | Named Entity Recognition | DistilBertForTokenClassification |

| en | en.embed.longformer.clinical | clinical_longformer | Embeddings | LongformerEmbeddings |

| en | en.classify.emotion.bert | bert_sequence_classifier_emotion | Text Classification | BertForSequenceClassification |

| de | de.classify.news_sentiment.bert | bert_sequence_classifier_news_sentiment | Sentiment Analysis | BertForSequenceClassification |

| en | en.classify.typos.distilbert | distilbert_token_classifier_typo_detector | Named Entity Recognition | DistilBertForTokenClassification |

| fr | fr.embed.word2vec_wiki_1000 | word2vec_wiki_1000 | Embeddings | WordEmbeddingsModel |

| fr | fr.embed.word2vec_wac_200 | word2vec_wac_200 | Embeddings | WordEmbeddingsModel |

| fr | fr.embed.w2v_cc_300d | w2v_cc_300d | Embeddings | WordEmbeddingsModel |

| vi | vi.embed.distilbert.cased | distilbert_base_cased | Embeddings | DistilBertEmbeddings |

New Healthcare Models

Integrated from the amazing 3.4.1 Spark NLP For Healthcare Release.

which makes 2 new Annotator Classes available, MedicalBertForSequenceClassification and MedicalDistilBertForSequenceClassification,

various medical Spanish models, RxNorm Resolvers,

Transformer based sequence classifiers for Drugs, Gender and the PICO framework,

and Relation extractors for Temporality and Causality of Drugs and Adverse Events.

New Medical Spanish Models

- Spanish Word2Vec Embeddings available with es.embed.sciwiki_300d

- Spanish PHI Deidentification NER models with two different subsets of entities extracted, available with ner_deid_generic and ner_deid_subentity

New Resolvers

- RxNorm resolvers with augmented concept data available with en.med_ner.supplement_clinical

New Transformer based Sequence Classifiers

- Adverse Drug Event Classifier Biobert based available with en.classify.ade.seq_biobert

- Patient Gender Classifier Biobert and Distilbert based available with en.classify.gender.seq_biobert and available with en.classify.ade.seq_distilbert

- PiCO Framework Classifier available with en.classify.pico.seq_biobert

New Relation Extractors

- Temporal Relation Extractor available with en.relation.temporal_events_clinical

- Adverse Drug Event Relation Extractors one version Biobert Embeddings and one non-DL version available with en.relation.adverse_drug_events.clinical available with en.relation.adverse_drug_events.clinical.biobert

Bugfixes

- Fixed bug that caused non-default output level of components to be sentence

- Fixed a bug that caused nlu references pointing to pretrained pipelines in spark nlp to crash in Databricks environments

Additional NLU resources

- 140+ NLU Tutorials

- NLU in Action

- Streamlit visualizations docs

- The complete list of all 4000+ models & pipelines in 200+ languages is available on Models Hub.

- Spark NLP publications

- NLU documentation

- Discussions Engage with other community members, share ideas, and show off how you use Spark NLP and NLU!

Install NLU in 1 line!

* Install NLU on Google Colab : !wget https://setup.johnsnowlabs.com/nlu/colab.sh -O - | bash

* Install NLU on Kaggle : !wget https://setup.johnsnowlabs.com/nlu/kaggle.sh -O - | bash

* Install NLU via Pip : ! pip install nlu pyspark streamlit==0.80.0`

NLU Version 3.4.0

1 line to OCR for images, PDFS and DOCX, Text Generation with GPT2 and new T5 models, Sequence Classification with XlmRoBerta, RoBerta, Xlnet, Longformer and Albert, Transformer based medical NER with MedicalBertForTokenClassifier, 80 new models, 20+ new languages including various African and Scandinavian and much more in John Snow Labs NLU 3.4.0 !

We are incredibly excited to announce John Snow Labs NLU 3.4.0 has been released!

This release features 11 new annotator classes and 80 new models, including 3 OCR Transformers which enable you to extract text

from various file types, support for GPT2 and new pretrained T5 models for Text Generation and dozens more of new transformer based models

for Token and Sequence Classification.

This includes 8 new Sequence classifier models which can be pretrained in Huggingface and imported into Spark NLP and NLU.

Finally, the NLU tutorial page of the 140+ notebooks has been updated

New NLU OCR Features

3 new OCR based spells are supported, which enable extracting text from files of type

JPEG, PNG, BMP, WBMP, GIF, JPG, TIFF, DOCX, PDF in just 1 line of code.

You need a Spark OCR license for using these, which is available for free here and refer to the new

OCR tutorial notebook

Find more details on the NLU OCR documentation page

New NLU Healthcare Features

The healthcare side features a new MedicalBertForTokenClassifier annotator which is a Bert based model for token classification problems like Named Entity Recognition,

Parts of Speech and much more. Overall there are 28 new models which include German De-Identification models, English NER models for extracting Drug Development Trials,

Clinical Abbreviations and Acronyms, NER models for chemical compounds/drugs and genes/proteins, updated MedicalBertForTokenClassifier NER models for the medical domains Adverse drug Events,

Anatomy, Chemicals, Genes,Proteins, Cellular/Molecular Biology, Drugs, Bacteria, De-Identification and general Medical and Clinical Named Entities.

For Entity Relation Extraction between entity pairs new models for interaction between Drugs and Proteins.

For Entity Resolution new models for resolving Clinical Abbreviations and Acronyms to their full length names and also a model for resolving Drug Substance Entities to the categories

Clinical Drug, Pharmacologic Substance, Antibiotic, Hazardous or Poisonous Substance and new resolvers for LOINC and SNOMED terminologies.

New NLU Open source Features

On the open source side we have new support for Open Ai’s GPT2 for various text sequence to sequence problems and

additionally the following new Transformer models are supported :

RoBertaForSequenceClassification, XlmRoBertaForSequenceClassification, LongformerForSequenceClassification,

AlbertForSequenceClassification, XlnetForSequenceClassification, Word2Vec with various pre-trained weights for various problems!

New GPT2 models for generating text conditioned on some input,

New T5 style transfer models for active to passive, formal to informal, informal to formal, passive to active sequence to sequence generation.

Additionally, a new T5 model for generating SQL code from natural language input is provided.

On top of this dozens new Transformer based Sequence Classifiers and Token Classifiers have been released, this is includes for Token Classifier the following models :

Multi-Lingual general NER models for 10 African Languages (Amharic, Hausa, Igbo, Kinyarwanda, Luganda, Nigerian, Pidgin, Swahilu, Wolof, and Yorùbá),

10 high resourced languages (10 high resourced languages (Arabic, German, English, Spanish, French, Italian, Latvian, Dutch, Portuguese and Chinese),

6 Scandinavian languages (Danish, Norwegian-Bokmål, Norwegian-Nynorsk, Swedish, Icelandic, Faroese) ,

Uni-Lingual NER models for general entites in the language Chinese, Hindi, Islandic, Indonesian

and finally English NER models for extracting entities related to Stocks Ticker Symbols, Restaurants, Time.

For Sequence Classification new models for classifying Toxicity in Russian text and English models for

Movie Reviews, News Categorization, Sentimental Tone and General Sentiment

New NLU OCR Models

The following Transformers have been integrated from Spark OCR

| NLU Spell | Transformer Class |

|---|---|

nlu.load(img2text) |

ImageToText |

nlu.load(pdf2text) |

PdfToText |

nlu.load(doc2text) |

DocToText |

New Open Source Models

Integration for the 49 new models from the colossal Spark NLP 3.4.0 release

New Healthcare Models

Integration for the 28 new models from the amazing Spark NLP for healthcare 3.4.0 release

Additional NLU resources

- NLU OCR tutorial notebook

- 140+ NLU Tutorials

- NLU in Action

- Streamlit visualizations docs

- The complete list of all 4000+ models & pipelines in 200+ languages is available on Models Hub.

- Spark NLP publications

- NLU documentation

- Discussions Engage with other community members, share ideas, and show off how you use Spark NLP and NLU!

Install NLU in 1 line!

* Install NLU on Google Colab : !wget https://setup.johnsnowlabs.com/nlu/colab.sh -O - | bash

* Install NLU on Kaggle : !wget https://setup.johnsnowlabs.com/nlu/kaggle.sh -O - | bash

* Install NLU via Pip : ! pip install nlu pyspark streamlit==0.80.0`

NLU Version 3.3.1

48 new Transformer based models in 9 new languages, including NER for Finance, Industry, Politcal Policies, COVID and Chemical Trials, various clinical and medical domains in Spanish and English and much more in NLU 3.3.1

We are incredibly excited to announce NLU 3.3.1 has been released with 48 new models in 9 languages!

It comes with 2 new types of state-of-the-art models,distilBERT and BERT for sequence classification with various pre-trained weights,

state-of-the-art bert based classifiers for problems in the domains of Finance, Sentiment Classification, Industry, News, and much more.

On the healthcare side, NLU features 22 new models in for English and Spanish with

with entity Resolver Models for LOINC, MeSH, NDC and SNOMED and UMLS Diseases,

NER models for Biomarkers, NIHSS-Guidelines, COVID Trials , Chemical Trials,

Bert based Token Classifier models for biological, genetical,cancer, cellular terms,